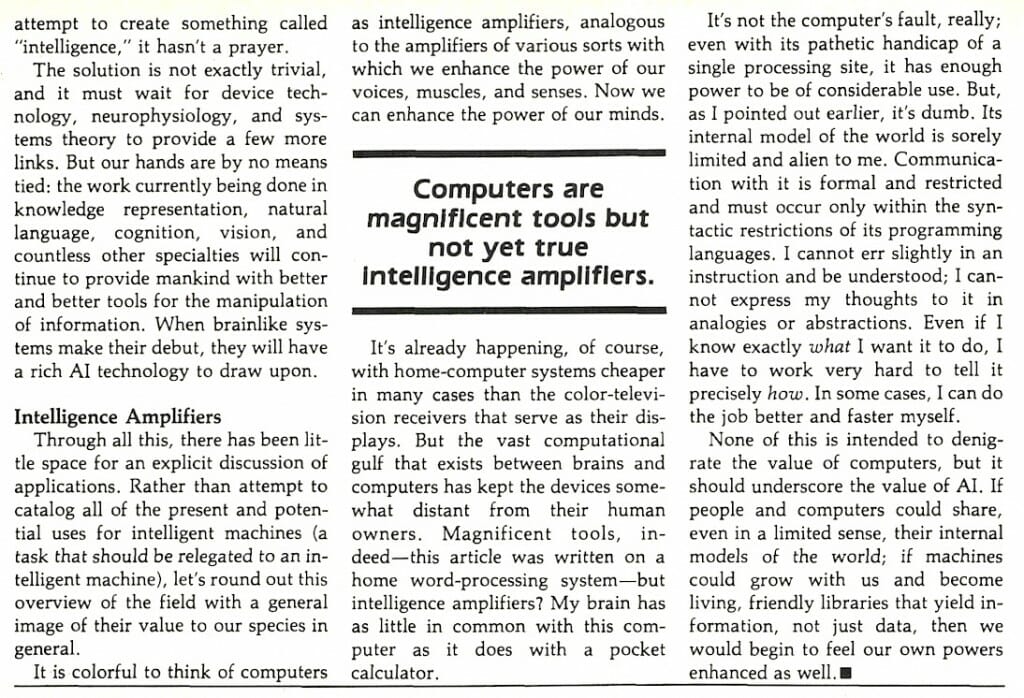

Artificial Intelligence – Byte

This was my first substantial essay on AI, and it fell out of an intensely stimulating two-week adventure at Stanford University that included the first International Conference on Artificial Intelligence, a LISP conference, schmoozing with some truly amazing authors, hanging out at Xerox PARC for an evening, and generally getting my brain expanded during every waking moment. It probably helped that this was in very sharp contrast to my Midwest existence; even though I was doing lots of fun stuff with microprocessors, it was a rather lonely pursuit. Hobnobbing in Silicon Valley academia, even as a dilettante, was eye-opening and in some ways even life-changing.

by Steven K. Roberts

BYTE

September, 1981 cover story

What is intelligence? This question has inspired great works for centuries. It has furrowed the learned brows of philosophers, psychologists, theologians, and neurophysicists as they have sought, in different ways, to find the answer. Until recently, the question has remained more or less outside the domain of technology. Only in science fiction has the notion of intelligence been applied to machines.

But man is a restless creature (thanks to his intelligence) and has a remarkable propensity for tool-building. The physical limitations of the human body are overcome daily with man-made tools: bulldozers, microscopes, telephones, pens, and thousands of other devices. Very near the top of any list of tools must be the computer.

Computers, as most people know and love them, are hardly worthy of the term “intelligence.” At best, they are fast and reliable (but abysmally stupid) machines that take very precisely defined tasks and tirelessly perform them over and over. This, of course, makes them invaluable in a fast-paced technological society such as ours, for we have become addicted to freedom from boring repetitive mental drudgery. (When was the last time you calculated a square root the old-fashioned pencil-and-paper way?) But for all their usefulness in assisting our many and varied efforts, computers are still absolutely uninspired contraptions.

In addition to being an incurable tool-builder, mankind also has a passion for information: lots of it. There seems to be no end to the exponential growth of human knowledge (it’s currently expanding at the approximate rate of 200,000,000 words per hour). On countless subjects ranging from the weather to the ills of our flesh, from computer design to the technology of war, mankind has accumulated such masses of information that only the narrowest of specialists in any field can truly claim to be an expert.

This, however, creates problems, because now that we have all this information, we need to use it. The obvious difficulty is simply providing access to such a large library of knowledge: a person attempting to locate one small fact can easily become bogged down in searching if the library is not extremely well organized and cross-referenced. A less obvious problem is the continual addition of new knowledge to the library without creating a nightmarish jumble of patches and outdated material.
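At its simplest, the organization and cross-referencing mentioned above can take the form of an inverted index. This is a table from each word to the documents that contain it, so finding a fact does not mean reading the whole library. The following Python sketch is only a minimal illustration under obvious assumptions: a tiny invented corpus, whitespace tokenization, and hypothetical helper names `build_index` and `lookup`. Real retrieval systems are far more elaborate.

```python
from collections import defaultdict

def build_index(documents):
    """Map each lowercase word to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word.strip(".,;:!?")].add(doc_id)
    return index

def lookup(index, *words):
    """Return the IDs of documents that contain every query word."""
    sets = [index.get(word.lower(), set()) for word in words]
    return set.intersection(*sets) if sets else set()

# A hypothetical three-document "library".
library = {
    1: "Meningitis is an infection of the membranes around the brain.",
    2: "Penicillin is an antibiotic.",
    3: "Bacterial meningitis may be treated with an antibiotic.",
}
index = build_index(library)
print(sorted(lookup(index, "meningitis", "antibiotic")))  # [3]
```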

The sheer quantity of information involved in such an effort cries out for a computer solution. After all, off-the-shelf hardware can provide literally billions of words of data storage, certainly enough for most “task-specific” information domains. But here we can see the need for something other than traditional data-processing techniques. (For an example of information storage using traditional techniques, see “Information Unlimited: The Dialog Information Retrieval Service,” by Stan Miastkowski, June 1981 BYTE, page 88.)

Types of Knowledge

Knowledge about almost anything can be split into two major classifications: factual and heuristic. Factual knowledge is the most obvious and needs little elaboration; it’s often called “textbook knowledge.” The heuristic variety, on the other hand, is a little harder to store in a computer. It is the network of intuitions, associations, judgment rules, pet theories, and general inference procedures that, in combination with factual knowledge about a field, allow mankind to exhibit intelligent behavior. (Further muddying the programming waters is a higher level of knowledge that can be included within the heuristic category: “meta-knowledge,” which is concerned with general problem-solving strategy and such esoterica as awareness of how to think.)
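One common way to keep the two kinds of knowledge apart in a program is to store factual knowledge as plain data and heuristic knowledge as if-then rules that draw new conclusions from those facts. The Python sketch below does this with a simple forward-chaining loop. The facts, the rules, and the `forward_chain` helper are hypothetical illustrations and are not drawn from any real system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

# Factual ("textbook") knowledge: plain assertions.
facts = {"engine cranks", "no fuel at injectors"}

# Heuristic knowledge: rules of thumb linking observations to conclusions.
rules = [
    ({"engine cranks", "no fuel at injectors"}, "suspect fuel supply"),
    ({"suspect fuel supply"}, "check fuel pump first"),
]

result = forward_chain(facts, rules)
print(sorted(result))
```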

Factual knowledge has been resident in computer systems for decades. Business systems containing records of customer, personnel, inventory, and accounting data typify the rather pedestrian uses to which the majority of large systems have been relegated. If most of the world’s computers suddenly became self-aware, they would be terribly bored with their fates.

Heuristic knowledge is substantially more difficult to represent in a program or data base than simple factual data. But any system that is intended as a sophisticated information resource must, in some fashion, incorporate this higher level of knowledge, if for no other reason than to reduce the problem of finding a given piece of information to one of manageable proportions.

Suppose, for example, that a system were created to provide physicians with clinical advice about certain infectious diseases. A mere listing of all the known facts, stored somewhere in the computer’s memory, is essentially useless: the only way to use such data would be to match a set of symptoms simplistically against the set of indications for each disease.
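The simplistic matching just described amounts to little more than counting overlaps, as this Python sketch shows. The disease names and symptom lists are invented placeholders, not clinical data, and `naive_diagnosis` is a hypothetical helper. Because every symptom counts equally, a finding assumed to be highly telling (a stiff neck, say) weighs no more than a common headache, and the two candidates below end up tied.

```python
def naive_diagnosis(symptoms, disease_table):
    """Rank diseases by how many of the patient's symptoms they list."""
    scores = {
        disease: len(symptoms & indications)
        for disease, indications in disease_table.items()
    }
    # Highest overlap first; ties broken alphabetically for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

# Invented placeholder data, not medical knowledge.
disease_table = {
    "disease A": {"fever", "headache", "fatigue"},
    "disease B": {"fever", "stiff neck", "rash"},
}
patient = {"fever", "headache", "stiff neck"}

print(naive_diagnosis(patient, disease_table))
# [('disease A', 2), ('disease B', 2)]
```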

This kind of approach is doomed from the start, because some symptoms are far more suggestive of a given disease than others, and a bare count of matching symptoms cannot tell the difference. The system should therefore be able to order specific additional tests (giving preference to non-invasive ones) before attempting a diagnosis. Also, the patient’s age, environment, and medical history must be taken into account. Amid all this, the system must be capable of ignoring certain facts if they are inconsistent with the most strongly suggested diagnosis: a patient’s tennis elbow, for example, is probably unrelated to his or her infectious meningitis.

If the machine is to be more useful than a textbook, it must be able to do all these things, as well as update its own information as often as required. In short, it must possess a measure of intelligence. Such a system is not mere conjecture, by the way. One has already been created to provide diagnosis and therapy selection for two major types of diseases: blood infections and meningitis. Developed at Stanford University by doctors Bruce Buchanan and Edward Shortliffe, the program, called MYCIN, has outperformed human diagnosticians in the identification and treatment of diseases in this class, not only through its accuracy in pinpointing the pathogen but also through its avoidance of over-prescribing treatment.
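MYCIN weighed its evidence with numbers called certainty factors. The Python sketch below borrows only that idea. It uses the commonly cited rule for combining two positive certainty factors that support the same conclusion, CF = CF1 + CF2 * (1 - CF1). It ignores negative evidence, conjunctive premises, and everything else that made the real program work. The findings, hypotheses, and rule strengths are invented placeholders, not medical knowledge.

```python
def combine_positive(cf1, cf2):
    """Combine two positive certainty factors for the same hypothesis.

    Each new piece of evidence closes part of the remaining gap to 1.0,
    so belief grows but never exceeds certainty.
    """
    return cf1 + cf2 * (1.0 - cf1)

def weighted_diagnosis(findings, rules):
    """Accumulate belief in each hypothesis from rules whose finding is present.

    rules: list of (finding, hypothesis, strength) with 0 < strength <= 1.
    Only positive evidence is handled in this sketch.
    """
    belief = {}
    for finding, hypothesis, strength in rules:
        if finding in findings:
            belief[hypothesis] = combine_positive(belief.get(hypothesis, 0.0), strength)
    return belief

# Invented rule strengths; the telling finding gets a high weight.
rules = [
    ("fever", "disease A", 0.2),
    ("headache", "disease A", 0.2),
    ("fever", "disease B", 0.2),
    ("stiff neck", "disease B", 0.7),
]
belief = weighted_diagnosis({"fever", "headache", "stiff neck"}, rules)
print({h: round(cf, 2) for h, cf in belief.items()})
# {'disease A': 0.36, 'disease B': 0.76}
```

Because the strongly suggestive finding carries a high weight, it dominates the result instead of counting the same as a common symptom.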

This last accomplishment is especially noteworthy, because the standard clinical approach to an unknown disease involves a broad-spectrum antibiotic attack on a wide variety of possibilities. This not only exposes the patient to potential toxic effects, but encourages the development of drug-resistant bacterial strains. (A recent Stanford University study revealed that one of every four persons in the United States received penicillin under a doctor’s orders in 1977 and that nearly 90% of these prescriptions were unnecessary.)

A Technology Is Born

For all of these reasons, along with many others ranging from the inadequacy of standard programming techniques to the sheer joy of research, computer science has spawned a new discipline: artificial intelligence (AI).

Actually, AI is not all that new: some of the foundations that underlie today’s work were laid in the late 1940s and early 1950s by Alan Turing, whose “imitation game” (today called the “Turing Test”) is still considered a valid method for determining whether or not a machine is intelligent. In essence, the Turing Test consists of an interrogator communicating via teleprinters with a human and a computer. The interrogator can attempt in any way possible to determine which is which through conversation over the communication links.

At first glance, it might seem that the examiner could easily tell the difference by asking such questions as, “What is 35,289 divided by 9117?” The human would presumably chew on it for a while, and the computer would instantly spit back an answer correct to twelve digits. The flaw in this kind of thinking is that the human might have an electronic calculator in his pocket and the computer, if indeed intelligent (and devious), might give a slow and erroneous answer just to fool the interrogator. Also, the computer might be unable to calculate as rapidly as we would expect, since much of what we call intelligence involves the storage of information in a relatively abstract and very symbolic form. It is possible that such a machine would have to go through a set of thought processes not markedly different from ours to do mathematical calculations, though for the sake of convenience, it would probably have a built-in “calculator.”

Turing’s work in this area was strangely prophetic and, for the conservative 1950s, somewhat radical. He wrote, “I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

Once again, technological progress is ahead of schedule—maybe. One of the distinguishing and provocative features of AI is that newer and ever more complex problems lurk behind each breakthrough. Most technologies reach maturity when progress becomes asymptotic: continued effort brings us closer and closer to the limits of what is possible but at an ever slower rate. (An example of this is the ongoing effort to make electronic logic devices switch faster. The time it takes for electrons to move from one place to another defines an immutable speed limit, and future performance increases must come from another source.) AI doesn’t seem to have such a limit, or if it does, it is (by definition) even further removed from our present comprehension than a complete picture of how the brain works.

This limitlessness makes AI, for many people, the most enchanting field of endeavor in the vast panoply of research fields. In the thirty years since Turing wrote his prophetic words, AI has grown from an esoteric part-time pursuit of a few visionaries to a full-fledged science, replete with subspecialties, societies, annual international conferences, and journals. Its existence is beginning to be felt outside academia, and in a few years, the computer as we know it is likely to be dramatically transformed.

Work in Progress

There are a number of robust sub-specialties in the world of artificial intelligence, dealing not only with various applications but with several problems that must be simultaneously overcome for the dreams of Turing and many others to be fulfilled. The two central problems are so closely intertwined that they can be discussed together: knowledge representation and natural language.

Consider the following conversation:

He: “Hungry?”

She: “I have a coupon for McDonald’s.”

He: “Have you seen my keys?”

She: “Look on the dresser.”

There are some very sophisticated information-processing operations going on here. In this dialogue, most of the real meaning (the real communication) is not explicitly stated. He opens by inquiring whether she is hungry and, in the process, is probably implying that he is hungry as well. She processes this and issues a very cryptic response. Not only does she inform him that she is either hungry or willing to go along for a ride, but she also suggests a specific place to eat and, further, hints at economic realities by weighting the choice of restaurant on the basis of a discount coupon. Her statement assumes that he will understand what a coupon is as well as what a McDonald’s is. His next question indicates even deeper communication: he has agreed with her about the choice of restaurant and suggests a specific mode of transportation. This suggestion, however, is made in a roundabout fashion. He asks if she knows where his keys are at the moment, assuming that she knows not only what keys are but also that they are linked with transportation. She, of course, understands that the keys he’s talking about are those of his automobile and suggests a course of action that will solve the transportation problem. In doing so, she correctly assumes not only that he will know which dresser she means (and that a dresser is a piece of furniture) but also that he will deduce that the keys must be there.

The implication is that communication between two people involves substantially more than the lexical meanings of the words. The conversation above would not have been so succinct if he had approached a stranger on the street with the same question. The difference suggests a special relationship between him and her: they share certain aspects of their internal models of the world.
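The role of a shared internal model can be caricatured as a network of associations. In the hypothetical Python sketch below, each listener's world knowledge is a small graph. Interpreting "Have you seen my keys?" then means finding a chain of associations from the keys to the current goal of getting to the restaurant. The graph contents and node names are invented, and real knowledge-representation schemes are far richer.

```python
from collections import deque

def explain(model, start, goal):
    """Breadth-first search for a chain of associations from start to goal."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for neighbor in model.get(path[-1], []):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append(path + [neighbor])
    return None  # no connection found: the remark seems irrelevant

# Her model of the world, largely shared with his (invented associations).
partner = {
    "his keys": ["his car", "dresser"],
    "his car": ["get to restaurant"],
}
# A stranger knows what keys are, but not that these keys start the car.
stranger = {"his keys": ["locked door"]}

print(explain(partner, "his keys", "get to restaurant"))
print(explain(stranger, "his keys", "get to restaurant"))
```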

This highlights a crucial truth: language has to be considered as only one part of a much more complex communication process, one in which the knowledge and states of mind of the participants are as much responsible for the interpretation of verbal utterances as are the words from which those utterances are formed. As a conversation progresses, the internal state of each participant continually changes to represent the modified reality that is the result of the communication.

(Frequently, problems occur between people when their respective internal models of the world differ sharply. “I had to work late” can be interpreted in a drastically different way from the one the speaker intended.)

When one attempts to build an intelligent machine, the complexities introduced by this larger view of communication can be surprising. Early systems, such as Joseph Weizenbaum’s ELIZA program, were developed without a clear awareness of the problem. They consisted of a stored body of facts with associated keywords that were used to scan the input messages. Whenever there was a match, sets of specific rules were invoked to produce a response based on both the system’s knowledge and the keywords it had located. No attention was given to the actual meaning of the sentences, just to the presence of certain words. Such systems quickly fail the Turing Test.
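The keyword approach can be reduced to a few lines. This Python sketch is a hypothetical, much-simplified imitation of the keyword-and-rule scheme. Weizenbaum's actual program also ranked keywords and reassembled fragments of the input, and the keywords and canned responses here are invented. Note that the sketch responds identically whether or not a sentence is negated, because it never looks at meaning.

```python
import re

# Keyword -> canned response; checked in order, first match wins.
RULES = [
    ("mother", "Tell me more about your family."),
    ("computer", "Do machines worry you?"),
    ("always", "Can you think of a specific example?"),
]
DEFAULT = "Please go on."

def respond(sentence):
    """Return the response for the first keyword found in the sentence."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    for keyword, response in RULES:
        if keyword in words:
            return response
    return DEFAULT

print(respond("My mother is always busy."))
print(respond("I never think about my mother."))
print(respond("What is 35,289 divided by 9117?"))
```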

As time went on, it was recognized that the communication problem is interwoven with knowledge itself. In the mid-1960s, programs were developed to translate input sentences into an internal formal language that, theoretically, would allow the system to perform inferences without needing to handle all the subtleties of ordinary conversation. But the knowledge and the meanings of words were still represented as passive data “objects” distinct from the program itself. Thus, it was difficult for any but the most rudimentary changes to occur in the system’s internal model of the world.
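A toy version of this stage might turn simple sentences of the form "X is a Y" into formal facts and then draw inferences from those facts alone. In the hypothetical Python sketch below, the single sentence pattern, the `isa` relation name, and the example sentences are all assumptions made for illustration. The facts are stored as passive tuples, separate from the code that reasons over them, and anything outside the tiny grammar is simply not understood.

```python
import re

# One sentence form only: "[A/An] X is a/an Y[.]"
PATTERN = re.compile(r"^(?:an?\s+)?(\w+) is an? (\w+)\.?$", re.IGNORECASE)

def parse(sentence):
    """Translate 'X is a Y' into the formal fact ('isa', X, Y), else None."""
    match = PATTERN.match(sentence.strip())
    if match is None:
        return None
    return ("isa", match.group(1).lower(), match.group(2).lower())

def infer_isa(facts):
    """Close 'isa' under transitivity: x isa y and y isa z give x isa z."""
    facts = set(facts)
    while True:
        new = {
            ("isa", x, z)
            for (_, x, y1) in facts
            for (_, y2, z) in facts
            if y1 == y2 and ("isa", x, z) not in facts
        }
        if not new:
            return facts
        facts |= new

sentences = ["Rex is a dog.", "A dog is a mammal.", "A mammal is an animal."]
facts = {fact for fact in map(parse, sentences) if fact is not None}
print(("isa", "rex", "animal") in infer_isa(facts))  # True
print(parse("Have you seen my keys?"))  # None
```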

Recently, a different approach has begun to show promise. Instead of drawing a clear line between the “intelligent program” and the knowledge, these programs actually embody the knowledge in their structure. With powerful AI languages such as LISP, it is possible for a system to learn and grow by modifying itself.
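LISP makes this possible because its programs are themselves lists: data that a running program can inspect, build, and execute. The Python sketch below only imitates that idea. Procedures are stored as nested lists standing in for LISP expressions, and the hypothetical `learn` function lets the system grow by constructing and installing new procedures at run time. It is a toy evaluator, not LISP, and the procedure names are invented.

```python
import operator

# Primitive operations available to stored procedures.
PRIMITIVES = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def evaluate(expr, env):
    """Evaluate a LISP-like expression written as nested Python lists.

    A number evaluates to itself, a string names a variable in env,
    and a list [op, arg1, arg2] applies a primitive to its arguments.
    """
    if isinstance(expr, (int, float)):
        return expr
    if isinstance(expr, str):
        return env[expr]
    op, *args = expr
    return PRIMITIVES[op](*(evaluate(arg, env) for arg in args))

# The system's procedural knowledge is stored as data it can inspect.
procedures = {"double": ["*", "x", 2]}

def learn(name, body):
    """Hypothetical 'learning' step: install a new procedure at run time."""
    procedures[name] = body

def call(name, x):
    """Run a stored procedure with x bound to the argument."""
    return evaluate(procedures[name], {"x": x})

# Compose a new procedure out of the body of an existing one.
learn("quadruple", ["*", procedures["double"], 2])
print(call("quadruple", 5))  # 20
```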

This all sounds very anthropomorphic, but there is still a vast gulf between our minds and even the best of the artificially intelligent systems. Though we have the technology to provide raw data storage on a scale comparable to the brain’s, we may be going about it all wrong.

Serial Versus Parallel

There are numerous computational feats that humans manage to accomplish daily without conscious effort. Many of them are still impossible for computers. Take pattern recognition, for example. When a friend walks into the room, you can establish his or her identity with a casual glance. The accuracy of your decision is not markedly affected by the set of the jaw, the tilt of the head, or disheveled hair.

According to current theories, you simply map a preprocessed visual image via some feature-extraction “hardware” onto a gigantic multidimensional associative memory. The answer pops out, linked with an elaborate internal model of your friend. Big deal.
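An associative memory of this kind can be illustrated with a Hopfield-style network. That model is chosen here purely for illustration; the text does not say which theory it has in mind. In this pure-Python sketch, each "face" is an invented pattern of eight +1/-1 features. A cue with one feature wrong settles back onto the stored pattern it resembles. The recall step itself involves no comparison against each stored face; the final line only names the result.

```python
def train(patterns):
    """Build Hebbian weights for a Hopfield-style memory of +1/-1 patterns."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no unit connects to itself
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, sweeps=5):
    """Update each unit in turn from its weighted inputs; the state
    usually settles onto a stored pattern close to the cue."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            total = sum(weights[i][j] * state[j] for j in range(n))
            state[i] = 1 if total >= 0 else -1
    return state

# Invented 8-feature "faces"; the two patterns are orthogonal.
memory = {
    "Alice": [1, 1, 1, 1, -1, -1, -1, -1],
    "Bob": [1, -1, 1, -1, 1, -1, 1, -1],
}
weights = train(list(memory.values()))

# Alice with one feature flipped (a different hairstyle, say).
cue = [1, 1, 1, -1, -1, -1, -1, -1]
settled = recall(weights, cue)
print([name for name, pattern in memory.items() if pattern == settled])  # ['Alice']
```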

A computer, on the other hand, has quite a chore to perform when it is fitted with a television camera and directed to recognize a face. It must scan the image raster dot by dot to acquire a numeric representation in memory. Then, it must engage in fast and furious number-crunching to calculate the spatial Fourier transform of the face. Elapsed time at this point might be pushing a minute or more, and the machine still hasn’t the foggiest notion of who it’s looking at. Then comes the hard part: one by one, the system must perform two-dimensional correlations between its freshly calculated data and blocks of stored image data corresponding to the people it “knows”—in each case, coming up with a number (the correlation coefficient) between 0 and 1 that expresses how much like a stored image the current image is. The stored image with the highest coefficient is deemed to be the one that matches.

But, if the person in front of the camera parts his hair differently, cocks his head to one side, and takes on a dramatic expression, then he might as well have just become someone else.
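The matching step can be sketched in Python with a normalized correlation coefficient, sum(a*b) / sqrt(sum(a*a) * sum(b*b)). For non-negative brightness values, this coefficient always lies between 0 and 1. The sketch computes the coefficient directly. Fourier-domain methods, which the text mentions, are a common way to speed up this kind of correlation on large images. The tiny 3x3 "images" and the names are invented. Shifting the same face by a single pixel is enough to change the verdict.

```python
import math

def correlation(image_a, image_b):
    """Normalized correlation of two same-sized grayscale images.

    With non-negative pixel values the result lies between 0 and 1,
    reaching 1 only when one image is a positive multiple of the other.
    """
    a = [p for row in image_a for p in row]
    b = [p for row in image_b for p in row]
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify(image, known_faces):
    """Return the stored name whose image correlates best, plus all scores."""
    scores = {name: correlation(image, face) for name, face in known_faces.items()}
    return max(scores, key=scores.get), scores

# Invented 3x3 "faces" (brightness values 0-9).
known_faces = {
    "Alice": [[9, 0, 9], [0, 9, 0], [9, 9, 9]],
    "Bob": [[0, 9, 0], [9, 9, 9], [0, 9, 0]],
}
same_pose = [[9, 0, 9], [0, 9, 0], [9, 9, 9]]
# Alice's image shifted one pixel to the right (left column filled with 0).
shifted = [[0, 9, 0], [0, 0, 9], [0, 9, 9]]

print(identify(same_pose, known_faces)[0])  # Alice
print(identify(shifted, known_faces)[0])    # Bob
```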

This problem gives specialists in image recognition fits. If a computer’s logic devices can switch as much as a million times faster than human neurons, it would seem that even intensive tasks such as pattern recognition could be done with correspondingly greater speed, even if not with ease.

Not so. Here’s the catch, and its solution will probably represent the next major revolution in computer design: Brains don’t center around single devices called “processors.” Computers do. Operations that the brain seems to perform with the simultaneous activation of millions of widely distributed logic elements must be performed in a computer by funneling the entire task through one tiny bottleneck. In many cases, the blinding speed of computer hardware more than makes up for this handicap (in calculating, sorting, etc) but in the types of problems encountered in the attempt to create something called “intelligence,” it hasn’t a prayer.
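The bottleneck argument can be put as rough arithmetic. Assume, purely for illustration, that a task needs $N$ elementary operations. A brain performs them all at once, each taking one neuron switching time $t_n$. A single processor must perform them one after another, each with a switching time $t_c = t_n / 10^6$ (the "million times faster" figure above):

$$
T_{\text{brain}} \approx t_n, \qquad
T_{\text{computer}} \approx N\,t_c = \frac{N}{10^{6}}\,t_n, \qquad
\frac{T_{\text{computer}}}{T_{\text{brain}}} \approx \frac{N}{10^{6}}.
$$

Under these assumptions, the single processor falls behind once a task involves more than about a million simultaneous operations, which is the scale of "millions of widely distributed logic elements" described above. The model ignores memory access, coordination overhead, and the fact that real tasks are only partly parallel.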

The solution is not exactly trivial, and it must wait for device technology, neurophysiology, and systems theory to provide a few more links. But our hands are by no means tied: the work currently being done in knowledge representation, natural language, cognition, vision, and countless other specialties will continue to provide mankind with better and better tools for the manipulation of information. When brain-like systems make their debut, they will have a rich AI technology to draw upon.

Intelligence Amplifiers

Through all this, there has been little space for an explicit discussion of applications. Rather than attempt to catalog all of the present and potential uses for intelligent machines (a task that should be relegated to an intelligent machine), let’s round out this overview with a general picture of their value to our species.

It is colorful to think of computers as intelligence amplifiers, analogous to the amplifiers of various sorts with which we enhance the power of our voices, muscles, and senses. Now we can enhance the power of our minds.

It’s already happening, of course, with home-computer systems cheaper in many cases than the color-television receivers that serve as their displays. But the vast computational gulf that exists between brains and computers has kept the devices somewhat distant from their human owners. Magnificent tools, indeed—this article was written on a home word-processing system—but intelligence amplifiers? My brain has as little in common with this computer as it does with a pocket calculator.

It’s not the computer’s fault, really; even with its pathetic handicap of a single processing site, it has enough power to be of considerable use. But, as I pointed out earlier, it’s dumb. Its internal model of the world is sorely limited and alien to me. Communication with it is formal and rigid, confined to the syntax of its programming languages. I cannot err slightly in an instruction and still be understood; I cannot express my thoughts to it in analogies or abstractions. Even if I know exactly what I want it to do, I have to work very hard to tell it precisely how. In some cases, I can do the job better and faster myself.
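Tolerating small slips would be one modest step in that direction. As a hypothetical illustration, Python's standard `difflib.get_close_matches` can map a misspelled command onto the nearest known one instead of rejecting it outright. The command list and the `interpret` helper are invented. Similarity of spelling is, of course, a long way from understanding intent.

```python
import difflib

# Hypothetical command vocabulary.
COMMANDS = ["print", "save", "delete", "search", "format"]

def interpret(word):
    """Return the known command closest to `word`, or None if nothing is close."""
    matches = difflib.get_close_matches(word.lower(), COMMANDS, n=1, cutoff=0.6)
    return matches[0] if matches else None

print(interpret("pirnt"))   # print
print(interpret("serach"))  # search
print(interpret("xyzzy"))   # None
```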

None of this is intended to denigrate the value of computers, but it should underscore the value of AI. If people and computers could share, even in a limited sense, their internal models of the world; if machines could grow with us and become living, friendly libraries that yield information, not just data, then we would begin to feel our own powers enhanced as well.

About the Author

Steven K. Roberts is a free-lance writer and microprocessor-systems consultant living in Dublin, Ohio. He is the author of Micromatics (published by Scelbi Publications) and Industrial Design with Microcomputers (to be published in early 1982 by Prentice-Hall).

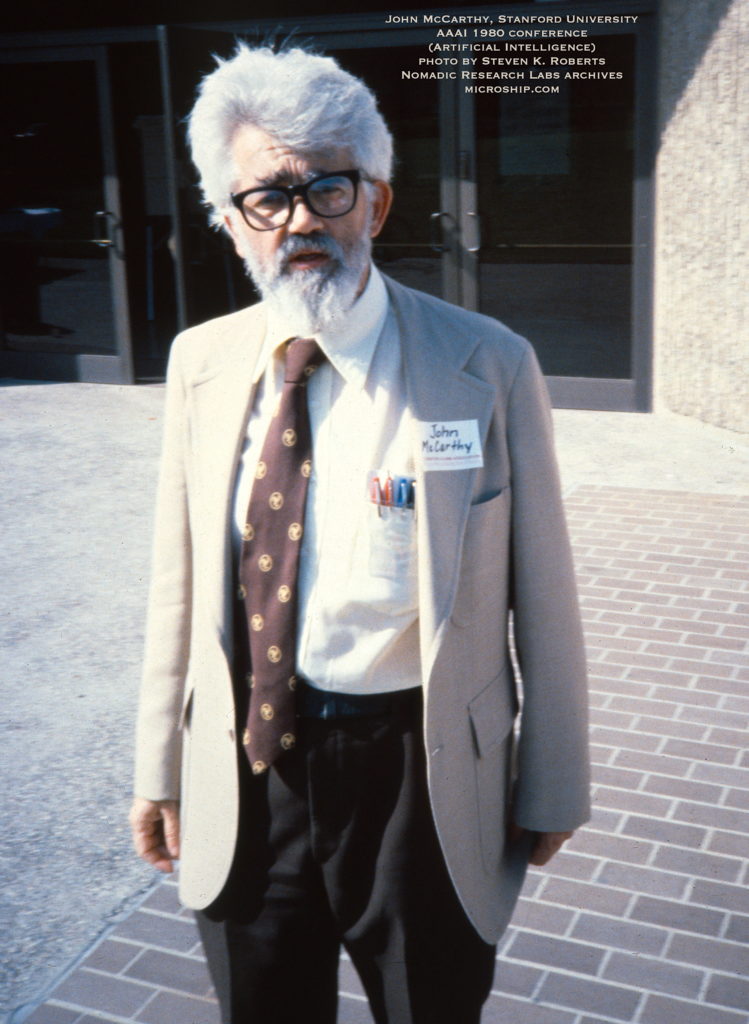

Research for this article included attendance at the First International Conference on Artificial Intelligence, held at Stanford University in August 1980.