Artificial Intelligence – Online Today

Between 1980 and 1983, before venturing off on a life of technomadics, I had the good fortune to become a sort of cutting-edge dilettante. Every few months, I would jet to an academic conference with a press pass, spend a few days hanging with the gurus of a new microculture, then return with a head full of ideas to translate the experience into feature articles in the popular computing press. This was seriously fun, and one of my favorites was the Artificial Intelligence community… rife with wizards in all sorts of intriguing subspecialties: natural language, machine vision, expert systems, inference engines, LISP, and more.

But the AI world of that era had a PR problem. Gushing news stories would over-hype the reality, while researchers in the field would publish inaccessible papers in their incestuous journals…all of which was leading to considerable public cynicism. I have no way of knowing how much I was able to offset that, but I wrote a variety of articles that attempted to explain some of the issues while treading the line between breathlessness and academic third-person boring. It’s fun now to look back at those and see where I got it wrong… and right.

Though this one wasn’t as high-profile as my cover story in Byte or others in the business press, I like it better… I had much more editorial freedom to play with ideas. The piece has an interesting take on corporate personhood, now more of an issue than ever, along with some thoughts in the online-publishing domain.

Artificial Intelligence:

Networking’s New Frontier

by Steven K. Roberts

Online Today

February, 1984

Ours is an industry of change. Memory densities double every few seasons; microcomputer execution speeds of millions of instructions per second no longer seem astounding, and the announcement of new machines is a daily occurrence. Yet rarely does anything really shake the industry’s foundations, for seldom are the changes much more than ongoing refinements (however exquisite) of old concepts.

That observation may raise the hackles of the field’s many wizards, but it is not a criticism. It’s just that we’re still building machines around ideas that were formulated 40 or 50 years ago. We’re doing it very well, of course, with a high-tech panache that renders the computing contraptions of five years ago crude by comparison, but still, we’re plodding along in a philosophical rut.

Nevertheless, every now and then something startling emerges from our collective technological consciousness — something that changes the world and spawns new industries. Such a thing was the transistor; such a thing was the microprocessor.

Such a thing is artificial intelligence.

Brains: natural, artificial

Brains and computers are radically different. This is unfortunate, for we would like computers to do many of the things that brains do. We have little trouble turning them loose on clearly defined data manipulation tasks, but they have not proven very useful for problems involving insight, goal-direction, ambiguous specifications, ill-defined objectives, general world knowledge, or wit.

The reasons for this are fairly obvious. Computers of the traditional variety depend for their operation on the sequential execution of a set of instructions. No matter how cleverly these instructions are contrived and no matter what their collective intent, the result is an inescapable limitation: The machine has no choice but to fetch one datum at a time from memory and then trundle off with it to turn some primitive logical crank. It can do this with impressive speed, making remarkable performance possible if the program is elegant enough, but it is nevertheless forced to funnel all activity through that tiny bottleneck.

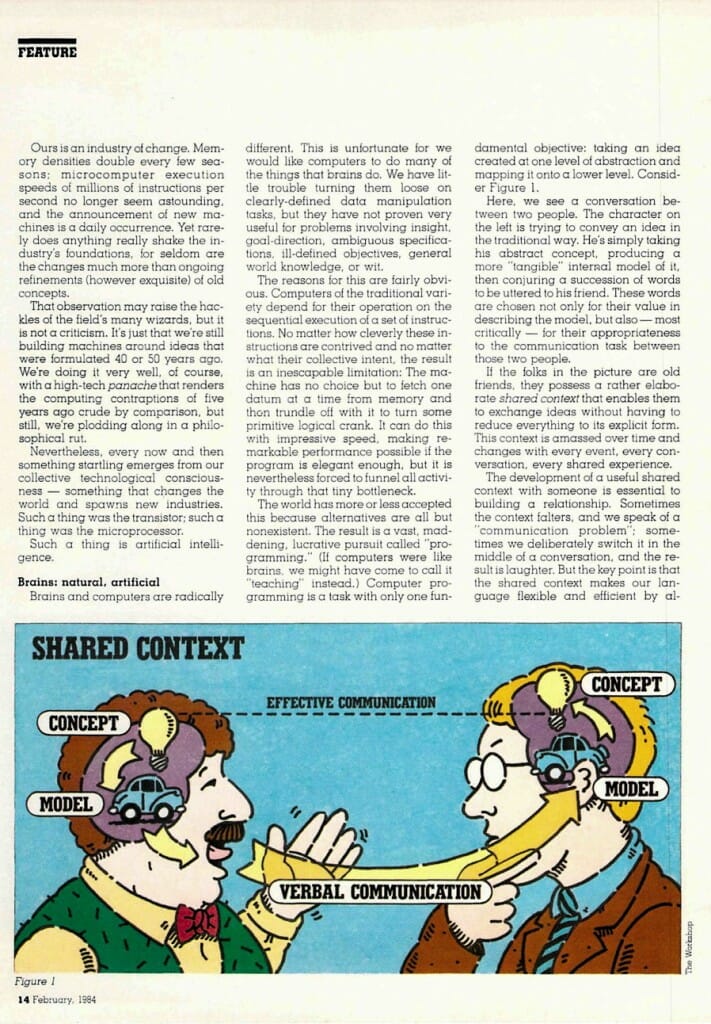

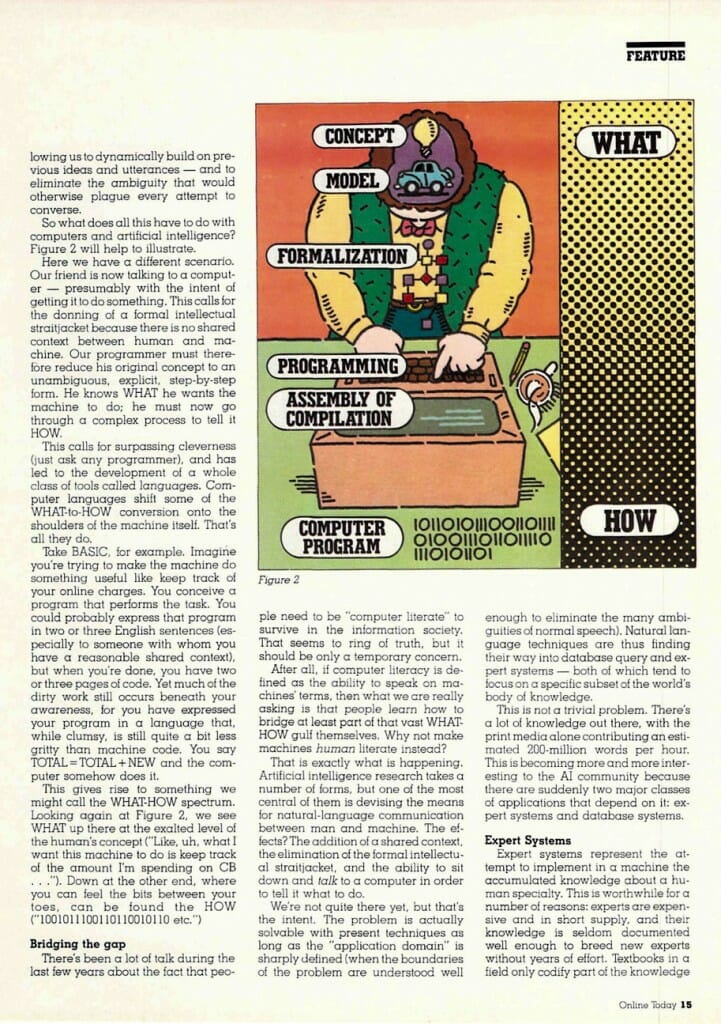

The world has more or less accepted this because alternatives are all but nonexistent. The result is a vast, maddening, lucrative pursuit called “programming.” (If computers were like brains, we might have come to call it “teaching” instead.) Computer programming is a task with only one fundamental objective: taking an idea created at one level of abstraction and mapping it onto a lower level. Consider Figure 1.

Here, we see a conversation between two people. The character on the left is trying to convey an idea in the traditional way. He’s simply taking his abstract concept, producing a more “tangible” internal model of it, then conjuring a succession of words to be uttered to his friend. These words are chosen not only for their value in describing the model, but also — most critically — for their appropriateness to the communication task between those two people.

If the folks in the picture are old friends, they possess a rather elaborate shared context that enables them to exchange ideas without having to reduce everything to its explicit form. This context is amassed over time and changes with every event, every conversation, every shared experience.

The development of a useful shared context with someone is essential to building a relationship. Sometimes the context falters, and we speak of a “communication problem”; sometimes we deliberately switch it in the middle of a conversation, and the result is laughter. But the key point is that the shared context makes our language flexible and efficient by allowing us to dynamically build on previous ideas and utterances — and to eliminate the ambiguity that would otherwise plague every attempt to converse.

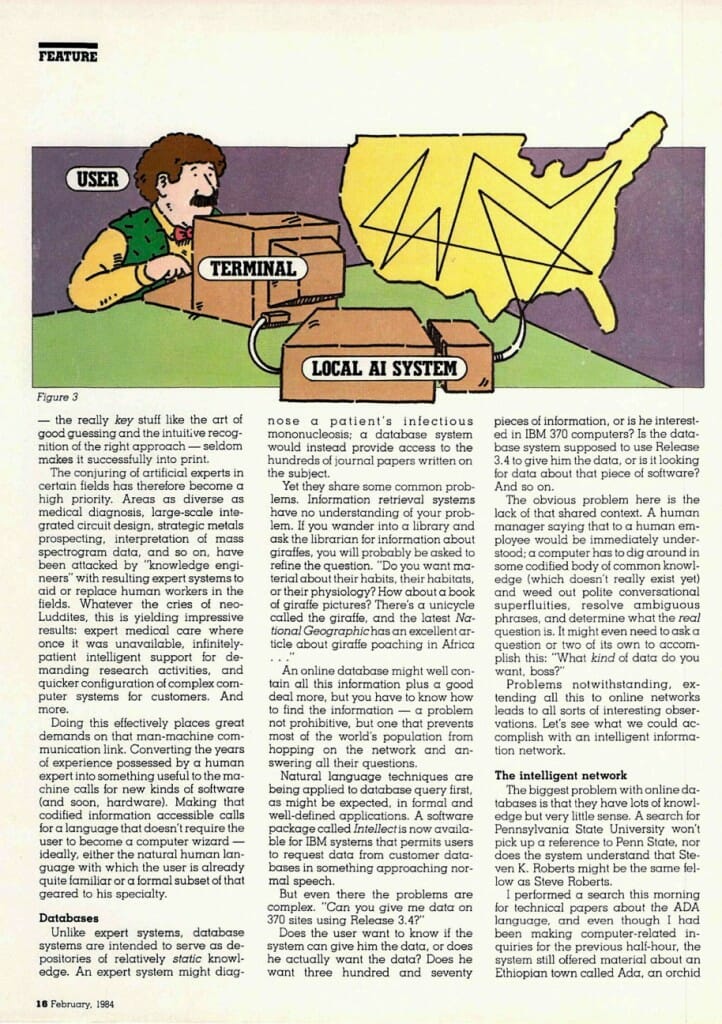

So what does all this have to do with computers and artificial intelligence? Figure 2 will help to illustrate.

Here we have a different scenario. Our friend is now talking to a computer — presumably with the intent of getting it to do something. This calls for the donning of a formal intellectual straitjacket because there is no shared context between human and machine. Our programmer must therefore reduce his original concept to an unambiguous, explicit, step-by-step form. He knows WHAT he wants the machine to do; he must now go through a complex process to tell it HOW.

This calls for surpassing cleverness (just ask any programmer), and has led to the development of a whole class of tools called languages. Computer languages shift some of the WHAT-to-HOW conversion onto the shoulders of the machine itself. That’s all they do.

Take BASIC, for example. Imagine you’re trying to make the machine do something useful like keep track of your online charges. You conceive a program that performs the task. You could probably express that program in two or three English sentences (especially to someone with whom you have a reasonable shared context), but when you’re done, you have two or three pages of code. Yet much of the dirty work still occurs beneath your awareness, for you have expressed your program in a language that, while clumsy, is still quite a bit less gritty than machine code. You say TOTAL = TOTAL + NEW and the computer somehow does it.
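To get a feel for the HOW end of the spectrum, here is roughly what that charge tracker reduces to. It is sketched in Python rather than 1984 BASIC. The session log and the hourly rate are made up for illustration. The marked line is the modern equivalent of TOTAL = TOTAL + NEW.

```python
# Hypothetical session log: (service name, connect minutes). Illustrative only.
SESSIONS = [("mail", 12), ("news", 30), ("database", 45)]

HOURLY_RATE = 6.00  # assumed flat connect-time rate, dollars per hour


def total_charges(sessions, hourly_rate):
    """Spell out the HOW: visit each session, price it, and accumulate."""
    total = 0.0
    for _name, minutes in sessions:
        new = minutes / 60 * hourly_rate
        total = total + new  # the BASIC line TOTAL = TOTAL + NEW
    return round(total, 2)


print(total_charges(SESSIONS, HOURLY_RATE))
```

Even this tiny version makes every step explicit: the loop, the unit conversion, and the running total. None of those appear in the one-sentence English description of the task.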

This gives rise to something we might call the WHAT-HOW spectrum. Looking again at Figure 2, we see WHAT up there at the exalted level of the human’s concept (“Like, uh, what I want this machine to do is keep track of the amount I’m spending on CB . . .”). Down at the other end, where you can feel the bits between your toes, can be found the HOW (“1001011100110110010110 etc.”).

Bridging the gap

There’s been a lot of talk during the last few years about the fact that people need to be “computer literate” to survive in the information society. That seems to ring of truth, but it should be only a temporary concern.

After all, if computer literacy is defined as the ability to speak on machines’ terms, then what we are really asking is that people learn how to bridge at least part of that vast WHAT-HOW gulf themselves. Why not make machines human literate instead?

That is exactly what is happening. Artificial intelligence research takes a number of forms, but one of the most central of them is devising the means for natural-language communication between man and machine. The effects? The addition of a shared context, the elimination of the formal intellectual straitjacket, and the ability to sit down and talk to a computer in order to tell it what to do.

We’re not quite there yet, but that’s the intent. The problem is actually solvable with present techniques as long as the “application domain” is sharply defined (that is, when the boundaries of the problem are understood well enough to eliminate the many ambiguities of normal speech). Natural language techniques are thus finding their way into database query and expert systems, both of which tend to focus on a specific subset of the world’s body of knowledge.

This is not a trivial problem. There’s a lot of knowledge out there, with the print media alone contributing an estimated 200 million words per hour. This is becoming more and more interesting to the AI community because there are suddenly two major classes of applications that depend on it: expert systems and database systems.

Expert Systems

Expert systems represent the attempt to implement in a machine the accumulated knowledge about a human specialty. This is worthwhile for a number of reasons: experts are expensive and in short supply, and their knowledge is seldom documented well enough to breed new experts without years of effort. Textbooks in a field codify only part of the knowledge; the really key stuff, like the art of good guessing and the intuitive recognition of the right approach, seldom makes it successfully into print.

The conjuring of artificial experts in certain fields has therefore become a high priority. Areas as diverse as medical diagnosis, large-scale integrated circuit design, strategic metals prospecting, and interpretation of mass spectrogram data have been attacked by “knowledge engineers,” with resulting expert systems to aid or replace human workers in those fields. Whatever the cries of neo-Luddites, this is yielding impressive results: expert medical care where once it was unavailable, infinitely patient intelligent support for demanding research activities, and quicker configuration of complex computer systems for customers. And more.

Doing this effectively places great demands on that man-machine communication link. Converting the years of experience possessed by a human expert into something useful to the machine calls for new kinds of software (and soon, hardware). Making that codified information accessible calls for a language that doesn’t require the user to become a computer wizard — ideally, either the natural human language with which the user is already quite familiar or a formal subset of that geared to his specialty.
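One common style of expert system stores the expert's knowledge as if-then rules. An inference engine then applies those rules to the known facts until nothing new follows. Below is a minimal forward-chaining sketch of that idea. The rules are hypothetical and loosely echo the race-car chassis example used later in this piece. They are not drawn from any real system.

```python
# Each rule pairs a set of required facts with the fact it concludes.
# These rules are invented for illustration.
RULES = [
    ({"high_downforce", "high_speed_track"}, "stiff_suspension"),
    ({"stiff_suspension"}, "reinforced_mounts"),
    ({"wet_conditions"}, "soft_compound_tires"),
]


def forward_chain(facts, rules):
    """Fire every rule whose conditions hold, repeating until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"high_downforce", "high_speed_track"}, RULES)
print(sorted(derived))
```

In this sketch, the second rule fires only because the first one did. That chaining lets a handful of small rules add up to a conclusion no single rule states.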

Databases

Unlike expert systems, database systems are intended to serve as repositories of relatively static knowledge. An expert system might diagnose a patient’s infectious mononucleosis; a database system would instead provide access to the hundreds of journal papers written on the subject.

Yet they share some common problems. Information retrieval systems have no understanding of your problem. If you wander into a library and ask the librarian for information about giraffes, you will probably be asked to refine the question. “Do you want material about their habits, their habitats, or their physiology? How about a book of giraffe pictures? There’s a unicycle called the giraffe, and the latest National Geographic has an excellent article about giraffe poaching in Africa…”

An online database might well contain all this information plus a good deal more, but you have to know how to find it. That problem is not insurmountable, but it keeps most of the world’s population from hopping on the network and answering all their questions.

Natural language techniques are being applied to database query first, as might be expected, in formal and well-defined applications. A software package called Intellect is now available for IBM systems that permits users to request data from customer databases in something approaching normal speech.

But even there the problems are complex. “Can you give me data on 370 sites using Release 3.4?”

Does the user want to know if the system can give him the data, or does he actually want the data? Does he want three hundred and seventy pieces of information, or is he interested in IBM 370 computers? Is the database system supposed to use Release 3.4 to give him the data, or is it looking for data about that piece of software? And so on.

The obvious problem here is the lack of that shared context. A human manager saying that to a human employee would be immediately understood; a computer has to dig around in some codified body of common knowledge (which doesn’t really exist yet) and weed out polite conversational superfluities, resolve ambiguous phrases, and determine what the real question is. It might even need to ask a question or two of its own to accomplish this: “What kind of data do you want, boss?”

Problems notwithstanding, extending all this to online networks leads to all sorts of interesting observations. Let’s see what we could accomplish with an intelligent information network.

The intelligent network

The biggest problem with online databases is that they have lots of knowledge but very little sense. A search for Pennsylvania State University won’t pick up a reference to Penn State, nor does the system understand that Steven K. Roberts might be the same fellow as Steve Roberts.
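One modest remedy is to normalize names through an alias table before matching, so that a query for either form finds both. Here is a minimal sketch. It assumes a hand-built table, and the entries and sample records are illustrative, not taken from any real database.

```python
# Hypothetical alias table: each known variant maps to one canonical form.
ALIASES = {
    "penn state": "pennsylvania state university",
    "steve roberts": "steven k. roberts",
}


def canonical(term):
    """Lowercase a term and map known variants to their canonical form."""
    key = term.strip().lower()
    return ALIASES.get(key, key)


def search(query, records):
    """Return records that mention the query under any of its known names."""
    target = canonical(query)
    variants = {target} | {alias for alias, canon in ALIASES.items() if canon == target}
    return [r for r in records if any(v in r.lower() for v in variants)]


RECORDS = [
    "Penn State announces new computing center",
    "Pennsylvania State University library hours",
    "Ohio State football schedule",
]
print(search("Pennsylvania State University", RECORDS))
```

The table only knows what someone put into it. That is precisely why the article goes on to argue that real improvement needs something closer to common sense.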

I performed a search this morning for technical papers about the Ada language, and even though I had been making computer-related inquiries for the previous half-hour, the system still offered material about an Ethiopian town called Ada, an orchid called Ada, Nabokov’s novels, and something having to do with Indiana’s educational system. Why? Because the system developed no contextual understanding from our interaction that it could then use to make assumptions about my information requests. “You want Ada? You got it, pal.”
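The missing ingredient is a memory of the session. As a rough sketch, assume each sense of an ambiguous term carries a few hypothetical keywords. The system remembers the words from earlier queries and ranks the senses by how much they overlap with that history. Real disambiguation is far harder, but this shows the idea.

```python
# Hypothetical senses of an ambiguous term, each with a few related keywords.
SENSES = {
    "ada": {
        "programming language": {"computer", "software", "compiler", "language"},
        "town in ethiopia": {"ethiopia", "africa", "geography"},
        "orchid": {"flower", "botany", "plant"},
        "nabokov novel": {"novel", "fiction", "literature"},
    }
}


def update_context(context, query):
    """Remember every word seen in this session's queries."""
    context.update(query.lower().split())


def rank_senses(term, context):
    """Order a term's senses by keyword overlap with the session context."""
    senses = SENSES.get(term.lower(), {})
    return sorted(senses, key=lambda s: len(senses[s] & context), reverse=True)


context = set()
for q in ["computer graphics", "software engineering", "compiler design"]:
    update_context(context, q)
print(rank_senses("Ada", context)[0])
```

After half an hour of computer-related queries, the programming-language sense rises to the top instead of sharing the page with orchids and Ethiopian towns.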

A modicum of intelligence somewhere in the system would change all that. But said modicum is not an easy thing to create, for it would have to possess what we so blithely call “common sense” — a database that would put the others to shame with its size and diversity.

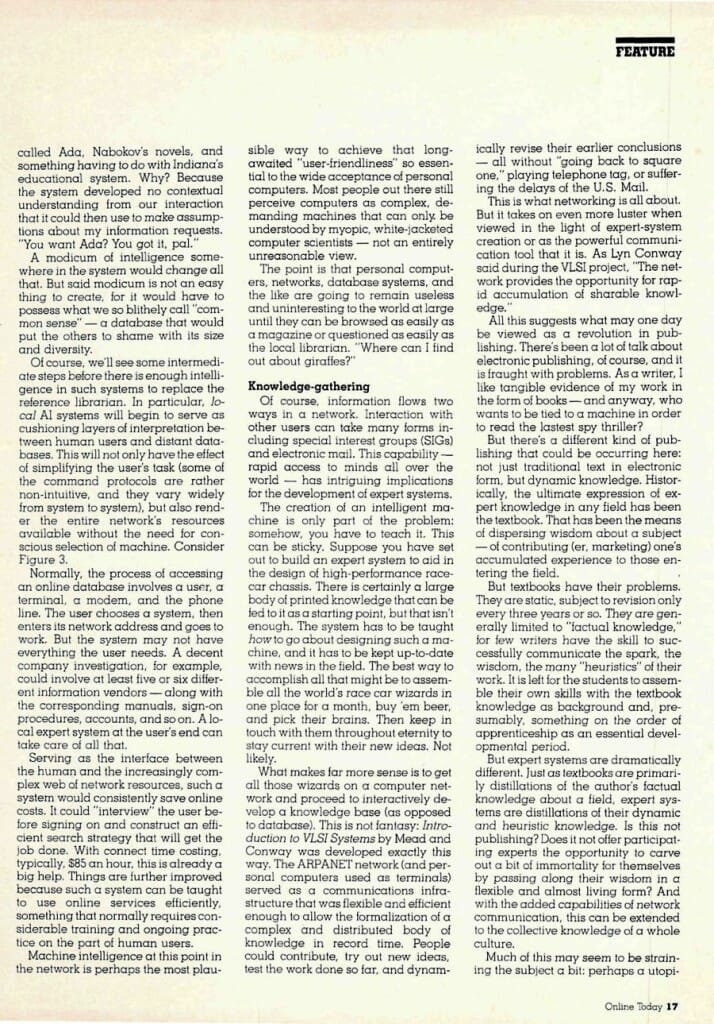

Of course, we’ll see some intermediate steps before there is enough intelligence in such systems to replace the reference librarian. In particular, local AI systems will begin to serve as cushioning layers of interpretation between human users and distant databases. This will not only simplify the user’s task (some of the command protocols are rather non-intuitive, and they vary widely from system to system) but also make the entire network’s resources available without the need to consciously select a machine. Consider Figure 3.

Normally, the process of accessing an online database involves a user, a terminal, a modem, and the phone line. The user chooses a system, then enters its network address and goes to work. But the system may not have everything the user needs. A decent company investigation, for example, could involve at least five or six different information vendors — along with the corresponding manuals, sign-on procedures, accounts, and so on. A local expert system at the user’s end can take care of all that.

Serving as the interface between the human and the increasingly complex web of network resources, such a system would consistently save online costs. It could “interview” the user before signing on and construct an efficient search strategy that would get the job done. With connect time costing, typically, $85 an hour, this is already a big help. Things are further improved because such a system can be taught to use online services efficiently, something that normally requires considerable training and ongoing practice on the part of human users.
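Here is a toy version of that front end. It assumes the interview has already reduced the user's needs to a list of topics. The vendor names, the catalog, and the five-minutes-per-topic estimate are all hypothetical. Only the $85-an-hour figure comes from the text above.

```python
CONNECT_RATE = 85.0  # dollars per hour, the typical figure cited in the article

# Hypothetical catalog mapping subjects to (made-up) information vendors.
CATALOG = {
    "financials": "VendorA",
    "news": "VendorB",
    "patents": "VendorC",
}


def plan_search(topics, minutes_per_topic=5):
    """Group the user's topics by vendor and estimate total connect-time cost."""
    plan = {}
    for topic in topics:
        vendor = CATALOG.get(topic)
        if vendor is None:
            continue  # no known source; a real system would ask the user again
        plan.setdefault(vendor, []).append(topic)
    minutes = minutes_per_topic * sum(len(t) for t in plan.values())
    cost = round(minutes / 60 * CONNECT_RATE, 2)
    return plan, cost


plan, cost = plan_search(["financials", "news", "patents", "weather"])
print(plan, cost)
```

Grouping by vendor before signing on is the point. The user never has to learn three sign-on procedures, and the estimate shows what the session will cost before the meter starts.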

Machine intelligence at this point in the network is perhaps the most plausible way to achieve that long-awaited “user-friendliness” so essential to the wide acceptance of personal computers. Most people out there still perceive computers as complex, demanding machines that can only be understood by myopic, white-jacketed computer scientists — not an entirely unreasonable view.

The point is that personal computers, networks, database systems, and the like are going to remain useless and uninteresting to the world at large until they can be browsed as easily as a magazine or questioned as easily as the local librarian. “Where can I find out about giraffes?”

Knowledge-gathering

Of course, information flows two ways in a network. Interaction with other users can take many forms including special interest groups (SIGs) and electronic mail. This capability — rapid access to minds all over the world — has intriguing implications for the development of expert systems.

The creation of an intelligent machine is only part of the problem: somehow, you have to teach it. This can be sticky. Suppose you have set out to build an expert system to aid in the design of high-performance race-car chassis. There is certainly a large body of printed knowledge that can be fed to it as a starting point, but that isn’t enough. The system has to be taught how to go about designing such a machine, and it has to be kept up-to-date with news in the field. The best way to accomplish all that might be to assemble all the world’s race car wizards in one place for a month, buy ’em beer, and pick their brains. Then keep in touch with them throughout eternity to stay current with their new ideas. Not likely.

What makes far more sense is to get all those wizards on a computer network and proceed to interactively develop a knowledge base (as opposed to database). This is not fantasy: Introduction to VLSI Systems by Mead and Conway was developed exactly this way. The ARPANET network (and personal computers used as terminals) served as a communications infrastructure that was flexible and efficient enough to allow the formalization of a complex and distributed body of knowledge in record time. People could contribute, try out new ideas, test the work done so far, and dynamically revise their earlier conclusions — all without “going back to square one,” playing telephone tag, or suffering the delays of the U.S. Mail.

This is what networking is all about. But it takes on even more luster when viewed in the light of expert-system creation or as the powerful communication tool that it is. As Lyn Conway said during the VLSI project, “The network provides the opportunity for rapid accumulation of sharable knowledge.”

All this suggests what may one day be viewed as a revolution in publishing. There’s been a lot of talk about electronic publishing, of course, and it is fraught with problems. As a writer, I like tangible evidence of my work in the form of books — and anyway, who wants to be tied to a machine in order to read the latest spy thriller?

But there’s a different kind of publishing that could be occurring here: not just traditional text in electronic form, but dynamic knowledge. Historically, the ultimate expression of expert knowledge in any field has been the textbook. That has been the means of dispersing wisdom about a subject — of contributing (er, marketing) one’s accumulated experience to those entering the field.

But textbooks have their problems. They are static, subject to revision only every three years or so. They are generally limited to “factual knowledge,” for few writers have the skill to successfully communicate the spark, the wisdom, the many “heuristics” of their work. It is left for the students to assemble their own skills with the textbook knowledge as background and, presumably, something on the order of apprenticeship as an essential developmental period.

But expert systems are dramatically different. Just as textbooks are primarily distillations of the author’s factual knowledge about a field, expert systems are distillations of the experts’ dynamic and heuristic knowledge. Is this not publishing? Does it not offer participating experts the opportunity to carve out a bit of immortality for themselves by passing along their wisdom in a flexible and almost living form? And with the added capabilities of network communication, this can be extended to the collective knowledge of a whole culture.

Much of this may seem to be straining the subject a bit: perhaps a utopian view of “the magic of computers.” Hardly. It’s happening now.

A medical expert system called MYCIN, developed at Stanford, easily outshines human diagnosticians in the identification and treatment of infectious blood diseases. MYCIN learned from human experts and is now used routinely in a number of medical settings. In keeping with the best laid plans of MYCIN men, the essence of that system has been applied to subjects as diverse as pulmonary diseases and structural engineering.
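MYCIN attached a certainty factor to each conclusion. When two rules both lent positive support to the same conclusion, MYCIN combined their factors as CF1 + CF2(1 - CF1), so the evidence accumulates without ever exceeding 1. The sketch below handles only that positive case. MYCIN also had rules for negative and conflicting evidence, which are left out here. The numbers are invented.

```python
from functools import reduce


def combine_positive(cf1, cf2):
    """Combine two positive certainty factors supporting the same conclusion."""
    if not (0 <= cf1 <= 1 and 0 <= cf2 <= 1):
        raise ValueError("this sketch only handles positive evidence in [0, 1]")
    return cf1 + cf2 * (1 - cf1)


def combine_all(cfs):
    """Fold any number of supporting certainty factors into one."""
    return reduce(combine_positive, cfs, 0.0)


# Hypothetical: three rules each lend partial support to one diagnosis.
print(round(combine_all([0.6, 0.4, 0.3]), 3))
```

The order in which the rules fire does not matter. One minus the combined value always equals the product of the individual (1 - CF) terms.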

And, of course, we all know what the Japanese are up to these days! A massive national project is underway to outstrip the rest of the world in expert system development and produce a machine by 1990 capable of over one-hundred-million logical inferences per second. (See review of The Fifth Generation in this month’s book section.)

So this is not, after all, a breathless rhapsody about the glorious future of computers. It’s just an energetic assessment of where we’re going.

Let’s look at some of the side effects of all this. Artificial intelligence is a sensitive subject, if for no other reason than its name.

Social and legal issues

Artificial intelligence. Mull that phrase over in your mind for a while.

The term has an interesting effect on people — never mind the technology to which it refers. “Artificial intelligence.”

“That’s what we need around here!” some people joke, while others observe, “I don’t know — sounds scary to me.”

Some just ask, “What the hell’s that?”

Quoting author Pamela McCorduck, I reply, “You know, machines who think.” Interesting — some people get rather sensitive about that “who.”

Semantics aside, AI does raise some fascinating issues. Central among them is that burning but essentially irrelevant question: Can a machine think? This has been debated for centuries on grounds ranging from the technical to the ethical. The very notion of intelligent artifacts, it seems, threatens people. We’ve been outperformed physically by machines for years and have managed to accept that, but we still cling tenaciously to that last bastion of superiority that sets us above machines, beasts and minorities.

Yes. Minorities. It is interesting to peer back at history and consider the twisted logic that has been used to prevent the enfranchisement of various groups as members of society. “Women are incapable of true thinking,” went a popular 19th-century argument, backed by all kinds of nonsense having to do with their biology and “God-given” purpose. In more recent memory, blacks were denounced as being of essentially inferior intelligence, and this was then used as a justification for “keeping them in their place.” In short, intelligence is more a political term than a scientific one.

People who say “machines cannot think” are really saying, simply, “machines are not human.” True.

But the evidence to date — which I must stress is primitive in the extreme — strongly suggests that we will soon be able, as Turing once said, “to speak of machines thinking without expecting to be contradicted.” Those who state flatly that we are treading on sacred ground should carefully examine their reasons for believing so.

What do we mean, anyway, by “machine intelligence”? In the early days of the field, when AI was the exclusive domain of visionary academic tinkerers in a handful of research labs, it was considered a victory when it became clear that a computer could play championship chess. The media seized this as a metaphor for machine intelligence — but the ability to play chess implies nothing beyond just that. Even if the contraption can run circles around human chess players, it is still an embarrassingly narrow specialist. Accounting software can run circles around people, too, and nobody gets too upset about that.

Things start to look a little different, however, when some measure of generality enters the picture. To my knowledge, nobody has yet contrived a system that can glance over the morning headlines, pick out a story about the latest bickering in the Middle East and draw humorous parallels between that and Frank Herbert’s novel Dune. But it’s all a matter of degree. We can easily make up a list of things that present machines cannot do, label them “intelligence,” and sit back smugly to scoff at the feebleminded devices and enjoy our innate superiority.

All I can say here is that such an attitude has never worked for long. There is every reason to expect that machines of some sort will eventually be capable of most of the things we call “intelligence,” even if they go about it in ways that are dramatically different from our own. Yes, even creativity, insight, and (lightning strike me dead) consciousness.

All of which leads to a rather entertaining and bizarre line of reasoning. Earlier I spoke of the enfranchisement of various groups as members of society. With many loud battles, we have admitted women, minorities, fetuses, and those with “brain death” into our exalted ranks. We have also invented non-human members of society called corporations.

The middle manager is about to be as threatened by AI as the assembly-line worker is by robots. It’s not too hard to imagine the gradual emplacement of expert systems in companies — machines that follow the company line to the letter, interface directly with production systems, and are more concerned with the success of the business than their personal struggle up the career ladder.

These systems won’t be sitting around at individual desks, of course — they will simply network with other company computers in no visually discernible hierarchy. And it doesn’t take too much imagination to conceive an entire firm that is managed by a sufficiently clever network of such electronic managers, all slaving away under a chief executive officer humming away in a tastefully decorated oaken cabinet down the hall.

Now. What happens when the firm gets involved in a lawsuit? Who’s responsible for that chemical dump, that severed arm in Hackensack, that clever elimination of a competitor by subtle price controls? Do you sue the computer? Why not? It might sue you if you hire away one of its resident wizards through some coup of wide-bandwidth network piracy.

Of course, if intelligent machines can sue and be sued (and this is not at all a frivolous notion — corporations do it, and “they’re” not human), then we have to suddenly start viewing them as reasonably autonomous members of society, with the attendant rights and responsibilities. (Interestingly, one of the active areas in expert-system research right now involves the development of a machine that can make legal decisions. Could we finally be seeing our first true circuit court?)

Anyway, such speculations suggest a number of issues that will doubtless fuel hot public debate for decades to come. My point here is that it is happening. We are looking at machines that are not just more robust versions of what we have now. AI has remained one of those quiet, incestuous academic communities for decades, with occasional flurries of media interest but very little commercial development. But recently — very recently — expert systems have reached a point where they are practical within sufficiently limited problem areas. By “practical” I mean profitable. This is a turning point in the industry, for AI research suddenly has a new source of energy: the bottom line.

The Fifth Generation is about to begin.
