Artificial Intelligence – Mini-Micro Systems

This article holds a sort of strange distinction in my memory… it was the first one I wrote while fully nomadic on my computerized recumbent bicycle, just a few days after leaving Columbus at the end of September 1983. Of course, I was also posting online about the trip itself and doing a series in Online Today magazine, but that was all rather self-referential. This one was pure trade-journal freelancing, and I slaved right up to deadline on my little Model 100 laptop, at last sending the article via CompuServe from my host’s kitchen phone line, late one night in Indianapolis.

After midnight. Gently, furtively, I removed my hosts’ telephone from their kitchen wall and plugged in the modular jack. I felt like someone in a Mission Impossible episode, hunched over a miniature computer system in a dark midwestern house, the family asleep and unaware of the satellite-borne data transmissions originating beside the Cuisinart. The article winged its way through the ether and came to rest on the spinning platters of a CompuServe disk drive somewhere in Columbus. In the morning, my editor at Mini-Micro Systems would sign on and transfer it to a Boston typesetting system, while Kacy would do likewise to make a copy for our files. Ain’t technology wonderful?

by Steven K. Roberts

Mini-Micro Systems

December, 1983

New applications raise social and legal issues

Artificial intelligence (AI) is the subfield of computer science concerned with symbolic reasoning, inference and problem solving—all subjects that historically have required “human” intelligence. Applications for AI techniques are moving beyond the “toy” problems that characterized early research. The technology has now become applicable to areas as diverse as very-large-scale-integration (VLSI) design, database query and medical diagnosis.

AI is drawing the public eye more than ever before, largely because of the Japanese Fifth Generation Project that is causing such alarm. With the sudden influx of funding from government and industry, the AI community now finds itself with more tools, people and energy to address the complex issues of natural language, vision and expert systems. In short, the field should no longer be viewed by practicing systems engineers as an interesting but remote topic.

Natural language

Perhaps the most complex and critical problem is the need to invest computer systems with the ability to deal efficiently with “natural” human language. Natural language can help machines interact much more successfully with computer-naive users. Why should people have to become “computer literate” to take advantage of system resources? It is much more sensible to make the computer “human literate.” In addition, there is a growing awareness that the representation of human knowledge by a machine requires something other than the old, reliable database techniques. In the AI community, arguments flourish in which formal logic is pitted against intuitive and “natural” paradigms as the foundation for a knowledge base. Formalisms such as predicate calculus are gradually yielding to the more flexible approaches embodied in a number of commercial products (MMS, October, Page 153).

But the problems remaining are many. Human speech is fraught with ambiguities, context dependency, unspoken “common-sense” information and the beliefs and goals of the speaker. Consider the following request that one might make to an assistant, or to a natural-language database query system: “Can you get me data on 370 sites using Release 3.4? I’d like them sorted by state.”

This statement would be quickly understood in context by the average human listener, especially one familiar with a company’s software products. But the problems facing a computer program that attempted to interpret such a command are staggering. “Can you get me data…?” A reasonable answer to this is “yes.” But the speaker is politely disguising a command, not investigating the machine’s capabilities, and the literal interpretation must be ignored. This requires, first of all, a general understanding of human communication style and social conventions.

Second, the request is rife with ambiguities: “…data on 370 sites….” Does the speaker want information about 370 installations, or is he interested in a type of computer? How is the software to decide without some contextual understanding of the situation? Resolving this requires the development of a shared context between speaker and listener, something that happens so smoothly with humans that it is seldom noticed. The more sharply limited the context, the less potential ambiguity present in any utterance.

Third, the speaker has issued an incomplete and vague command. What kind of data does he want? Company name and address? Status of account? Object dumps from the systems? In a real-life human situation, the listener would probably have a reasonably good idea of what the speaker has in mind, perhaps refining it with a cryptic comment such as “Updates, too?”

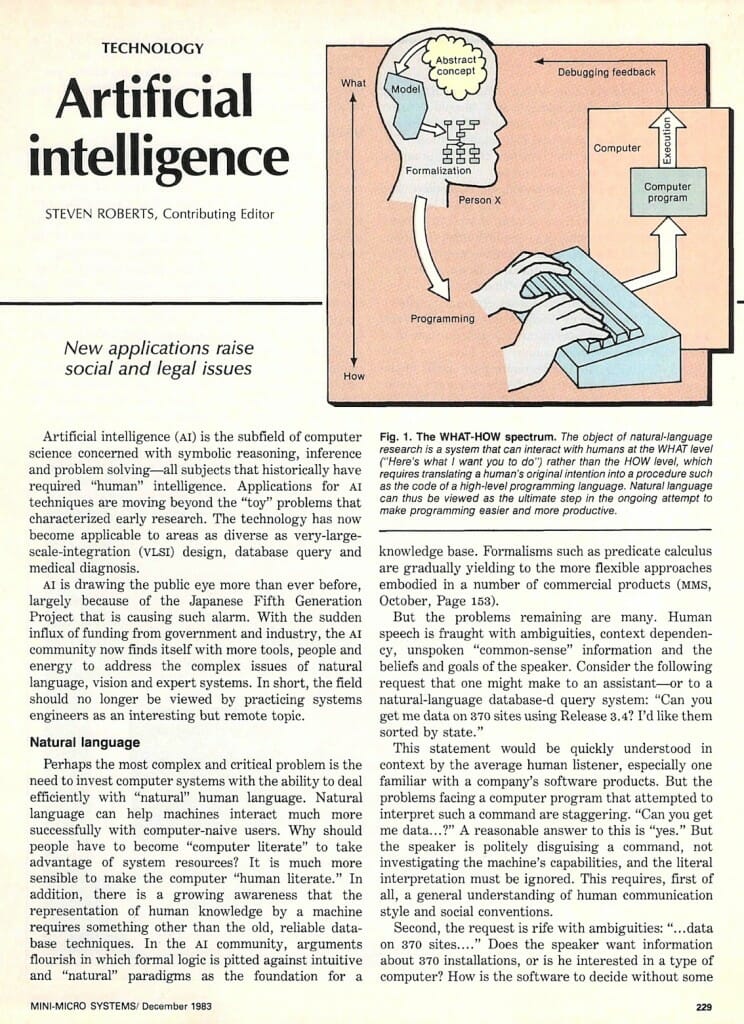

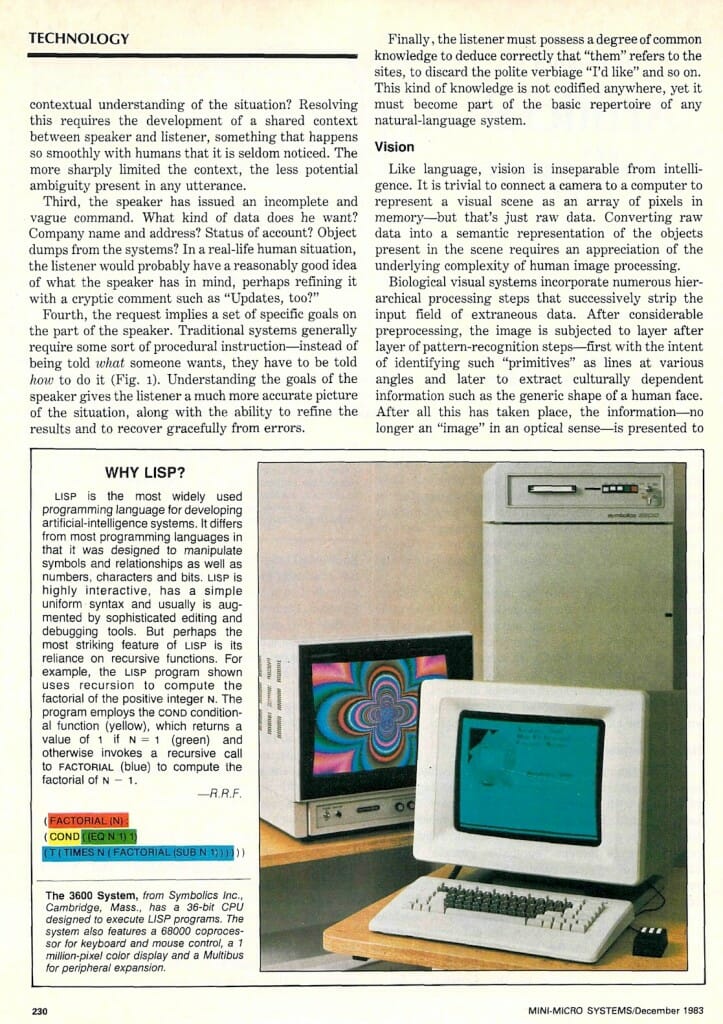

Fourth, the request implies a set of specific goals on the part of the speaker. Traditional systems generally require some sort of procedural instruction: instead of being told what someone wants, they have to be told how to do it (Fig. 1). Understanding the goals of the speaker gives the listener a much more accurate picture of the situation, along with the ability to refine the results and to recover gracefully from errors.

Finally, the listener must possess a degree of common knowledge to deduce correctly that “them” refers to the sites, to discard the polite verbiage “I’d like” and so on. This kind of knowledge is not codified anywhere, yet it must become part of the basic repertoire of any natural-language system.
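The contrast drawn in the fourth point, between telling a system how to do something and telling it what is wanted, can be made concrete with a small sketch. Everything here is hypothetical: the `SITES` records, their field names, and the `goal` dictionary are invented for illustration and do not come from any real product. The sketch also assumes the ambiguity in “370 sites” has already been resolved in favor of the machine-type reading, which is the hard part a natural-language system would have to do for itself.

```python
# Hypothetical site records; fields and values are invented for illustration.
SITES = [
    {"company": "Acme", "state": "OH", "machine": "370", "release": "3.4"},
    {"company": "Birch", "state": "CA", "machine": "370", "release": "3.4"},
    {"company": "Cobalt", "state": "NY", "machine": "4341", "release": "3.4"},
    {"company": "Delta", "state": "AL", "machine": "370", "release": "3.2"},
]


def procedural_query(sites):
    """The 'how' style: every step of the search and sort is spelled out."""
    matches = []
    for site in sites:
        if site["machine"] == "370" and site["release"] == "3.4":
            # Walk the result list to find where this state belongs.
            position = 0
            while (position < len(matches)
                   and matches[position]["state"] <= site["state"]):
                position += 1
            matches.insert(position, site)
    return matches


def answer(goal, sites):
    """The 'what' style: interpret a description of the desired result."""
    rows = [
        site for site in sites
        if all(site[field] == value for field, value in goal["where"].items())
    ]
    return sorted(rows, key=lambda site: site[goal["order_by"]])


# The speaker's goal, stated as data rather than as a sequence of steps.
goal = {"where": {"machine": "370", "release": "3.4"}, "order_by": "state"}

if __name__ == "__main__":
    for site in answer(goal, SITES):
        print(site["state"], site["company"])
```

Both functions return the same rows, but only the second lets the request change without the procedure being rewritten. Getting from an English sentence to something like `goal` is the part that remains unsolved.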

Vision

Like language, vision is inseparable from intelligence. It is trivial to connect a camera to a computer to represent a visual scene as an array of pixels in memory—but that’s just raw data. Converting raw data into a semantic representation of the objects present in the scene requires an appreciation of the underlying complexity of human image processing.

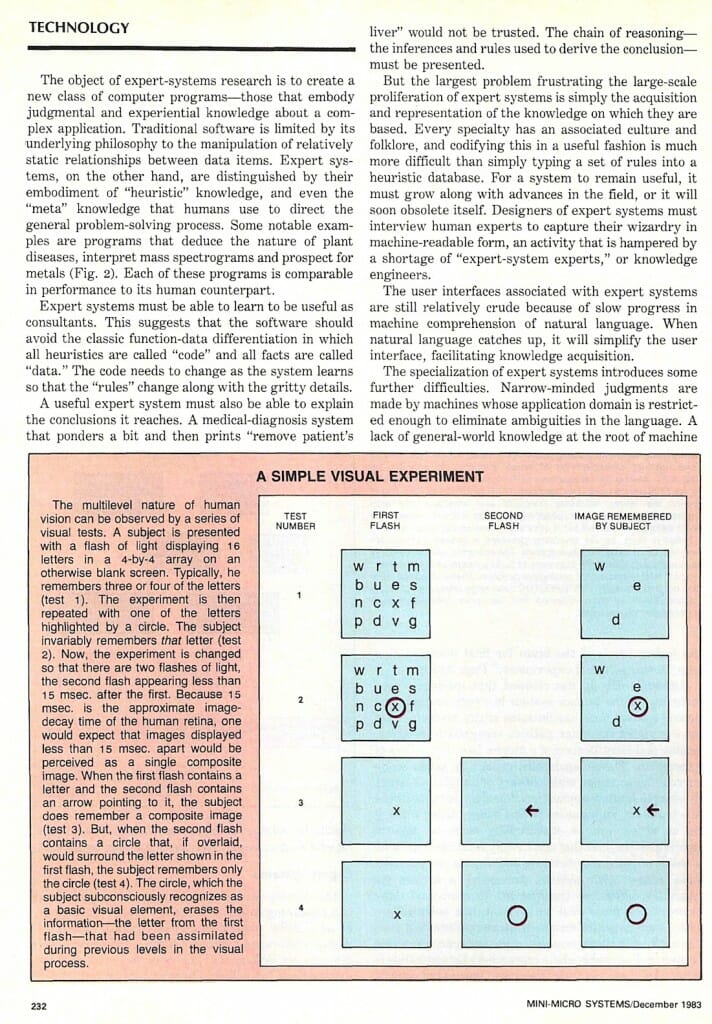

Biological visual systems incorporate numerous hierarchical processing steps that successively strip the input field of extraneous data. After considerable preprocessing, the image is subjected to layer after layer of pattern-recognition steps—first with the intent of identifying such “primitives” as lines at various angles and later to extract culturally dependent information such as the generic shape of a human face. After all this has taken place, the information—no longer an “image” in an optical sense—is presented to the higher levels of the brain for final interpretation (see “A simple visual experiment,” Page 232).

Although nobody has claimed that machine vision must model the human system in every respect, the human system does handle some sticky problems that have stymied computer pattern recognition: rotation, scaling and identification of a human face, regardless of expression. These capabilities cannot be achieved by correlating an image with a library of templates (except in sharply-limited application domains) because there are too many ambiguities in the image. Lines making up an object can be obscured by noise or uniform lighting, extra lines that don’t really exist can appear as a result of pattern or texture, and objects can partially hide behind other objects, presenting a surface not explicitly defined to the system. To compound these problems, humans deal with visual data holistically—processing an entire scene—while computers do it pixel by pixel. For these reasons, a general-purpose vision system will probably elude researchers for some time to come. In the meantime, the most impressive results should be obtained in highly-specialized robotics applications such as edge detection and image irradiance.
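The weakness of template correlation is easy to demonstrate on a toy scale. The sketch below is only an illustration under simple assumptions: a 5-by-5 binary image of the letter L, a pixel-agreement score invented for this example, and a single quarter-turn rotation. It is not a description of any particular vision system.

```python
# A tiny binary image: '#' is ink, '.' is background.
TEMPLATE = [
    "#....",
    "#....",
    "#....",
    "#....",
    "#####",
]


def rotate_quarter_turn(image):
    """Return the image rotated 90 degrees clockwise."""
    width = len(image[0])
    return [
        "".join(row[column] for row in reversed(image))
        for column in range(width)
    ]


def match_score(image, template):
    """Fraction of pixel positions at which image and template agree."""
    total = 0
    agree = 0
    for image_row, template_row in zip(image, template):
        for image_pixel, template_pixel in zip(image_row, template_row):
            total += 1
            if image_pixel == template_pixel:
                agree += 1
    return agree / total


if __name__ == "__main__":
    print("same orientation:", match_score(TEMPLATE, TEMPLATE))
    rotated = rotate_quarter_turn(TEMPLATE)
    print("after rotation:  ", match_score(rotated, TEMPLATE))
```

The same object, merely turned on its side, no longer matches its own template perfectly. A library of templates would need an entry for every rotation, scale and lighting condition, which is why the approach only works in sharply limited domains.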

Expert systems

The development of systems that can be put to work in a consulting capacity is another major research area in AI. Unlike natural-language systems, which deal with a wide range of human verbal expression, expert systems are defined as application-oriented machines, placing them immediately in the realm of the practical.

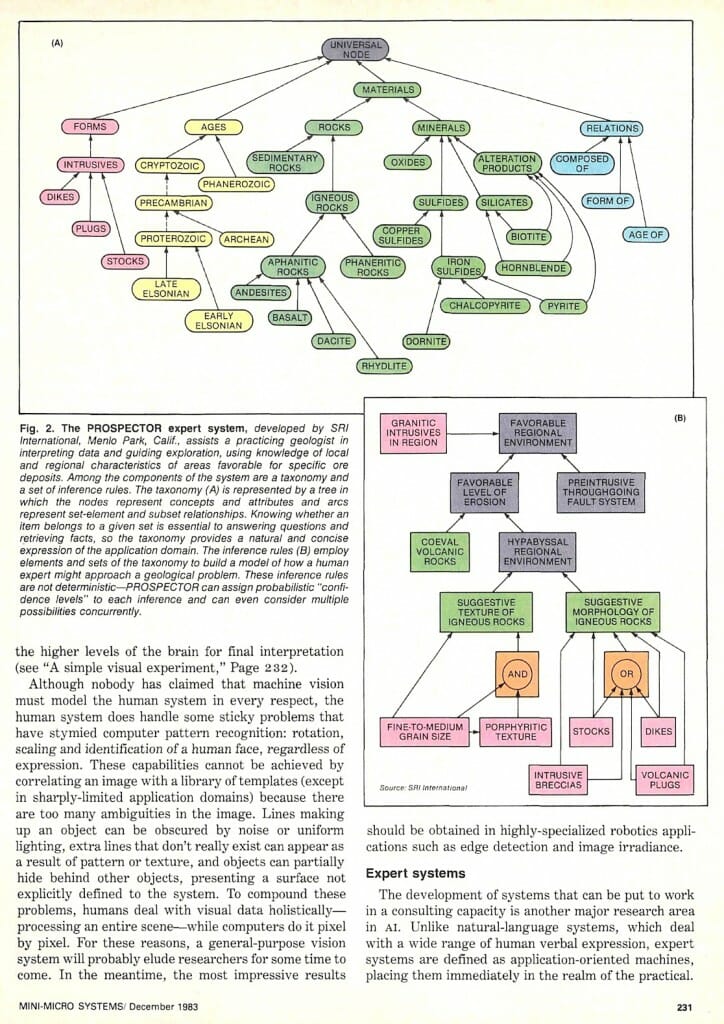

The object of expert-systems research is to create a new class of computer programs—those that embody judgmental and experiential knowledge about a complex application. Traditional software is limited by its underlying philosophy to the manipulation of relatively static relationships between data items. Expert systems, on the other hand, are distinguished by their embodiment of “heuristic” knowledge, and even the “meta” knowledge that humans use to direct the general problem-solving process. Some notable examples are programs that deduce the nature of plant diseases, interpret mass spectrograms and prospect for metals (Fig. 2). Each of these programs is comparable in performance to its human counterpart.

To be useful as consultants, expert systems must be able to learn. This suggests that the software should avoid the classic function-data differentiation in which all heuristics are called “code” and all facts are called “data.” The code needs to change as the system learns so that the “rules” change along with the gritty details.

A useful expert system must also be able to explain the conclusions it reaches. A medical-diagnosis system that ponders a bit and then prints “remove patient’s liver” would not be trusted. The chain of reasoning—the inferences and rules used to derive the conclusion—must be presented.
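A minimal sketch of this idea, assuming a simple forward-chaining design: the rules are held as ordinary data, and every rule that fires is recorded so the chain of reasoning can be printed alongside the conclusion. The rule names and the plant-disease facts are hypothetical, invented for illustration only, and real expert systems are far more elaborate.

```python
# Hypothetical rules, stored as data: (name, premises, conclusion).
RULES = [
    ("R1", {"yellow leaves", "wet soil"}, "root rot suspected"),
    ("R2", {"root rot suspected", "soft stem base"}, "fungal infection"),
    ("R3", {"fungal infection"}, "recommend fungicide"),
]


def forward_chain(facts, rules):
    """Fire rules until nothing new can be concluded; record each firing."""
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            # A rule fires when all its premises are known and it adds a new fact.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                trace.append((name, sorted(premises), conclusion))
                changed = True
    return known, trace


def explain(trace):
    """Turn the recorded firings into a readable chain of reasoning."""
    return [
        f"{name}: because {' and '.join(premises)}, conclude {conclusion}"
        for name, premises, conclusion in trace
    ]


if __name__ == "__main__":
    observations = {"yellow leaves", "wet soil", "soft stem base"}
    known, trace = forward_chain(observations, RULES)
    for line in explain(trace):
        print(line)
```

Because `RULES` is a plain list rather than compiled logic, rules can be added or revised while the system is in use, and the explanation comes from the same record that produced the conclusion.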

But the biggest obstacle to the large-scale proliferation of expert systems is simply the acquisition and representation of the knowledge on which they are based. Every specialty has an associated culture and folklore, and codifying this in a useful fashion is much more difficult than simply typing a set of rules into a heuristic database. For a system to remain useful, it must grow along with advances in the field, or it will soon become obsolete. Designers of expert systems must interview human experts to capture their wizardry in machine-readable form, an activity that is hampered by a shortage of “expert-system experts,” or knowledge engineers.

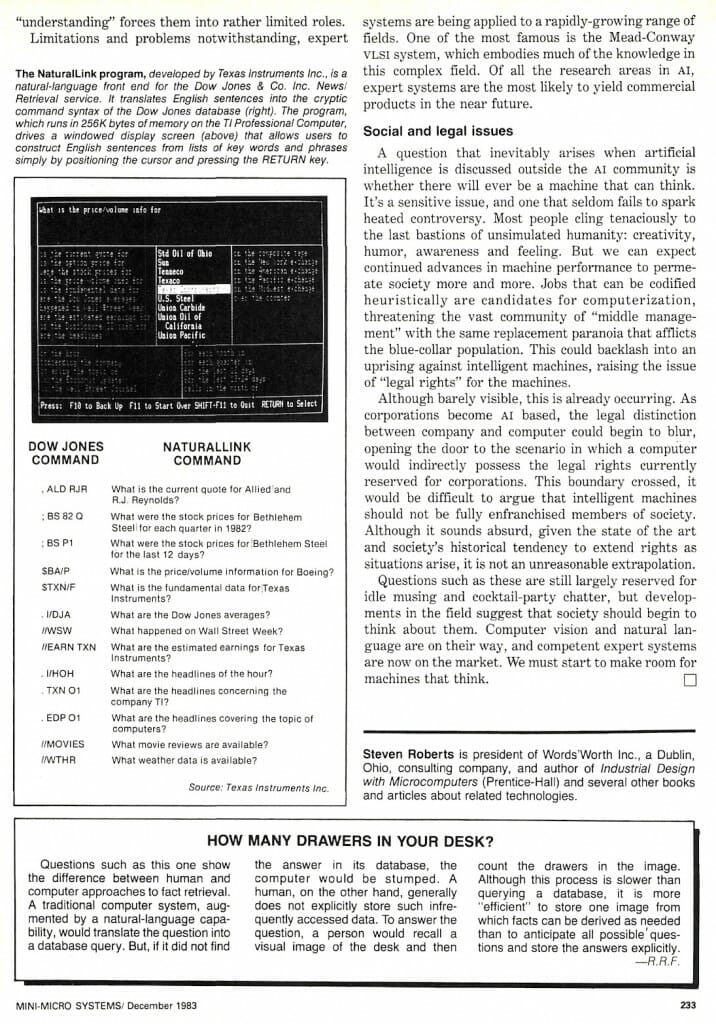

The user interfaces associated with expert systems are still relatively crude because of slow progress in machine comprehension of natural language. When natural language catches up, it will simplify the user interface, facilitating knowledge acquisition.

The specialization of expert systems introduces some further difficulties. Narrow-minded judgments are made by machines whose application domain is restricted enough to eliminate ambiguities in the language. A lack of general-world knowledge at the root of machine “understanding” forces them into rather limited roles.

Limitations and problems notwithstanding, expert systems are being applied to a rapidly-growing range of fields. One of the most famous is the Mead-Conway VLSI system, which embodies much of the knowledge in this complex field. Of all the research areas in AI, expert systems are the most likely to yield commercial products in the near future.

Social and legal issues

A question that inevitably arises when artificial intelligence is discussed outside the AI community is whether there will ever be a machine that can think. It’s a sensitive issue, and one that seldom fails to spark heated controversy. Most people cling tenaciously to the last bastions of unsimulated humanity: creativity, humor, awareness and feeling. But we can expect continued advances in machine performance to permeate society more and more. Jobs that can be codified heuristically are candidates for computerization, threatening the vast community of “middle management” with the same replacement paranoia that afflicts the blue-collar population. This could backlash into an uprising against intelligent machines, raising the issue of “legal rights” for the machines.

Although barely visible, this is already occurring. As corporations become AI based, the legal distinction between company and computer could begin to blur, opening the door to the scenario in which a computer would indirectly possess the legal rights currently reserved for corporations. This boundary crossed, it would be difficult to argue that intelligent machines should not be fully enfranchised members of society. Although it sounds absurd, given the state of the art and society’s historical tendency to extend rights as situations arise, it is not an unreasonable extrapolation.

Questions such as these are still largely reserved for idle musing and cocktail-party chatter, but developments in the field suggest that society should begin to think about them. Computer vision and natural language are on their way, and competent expert systems are now on the market. We must start to make room for machines that think.

Steven Roberts is president of Words’Worth Inc., a Dublin, Ohio, consulting company, and author of Industrial Design with Microcomputers (Prentice-Hall) and several other books and articles about related technologies.
