LISP Comes of Age

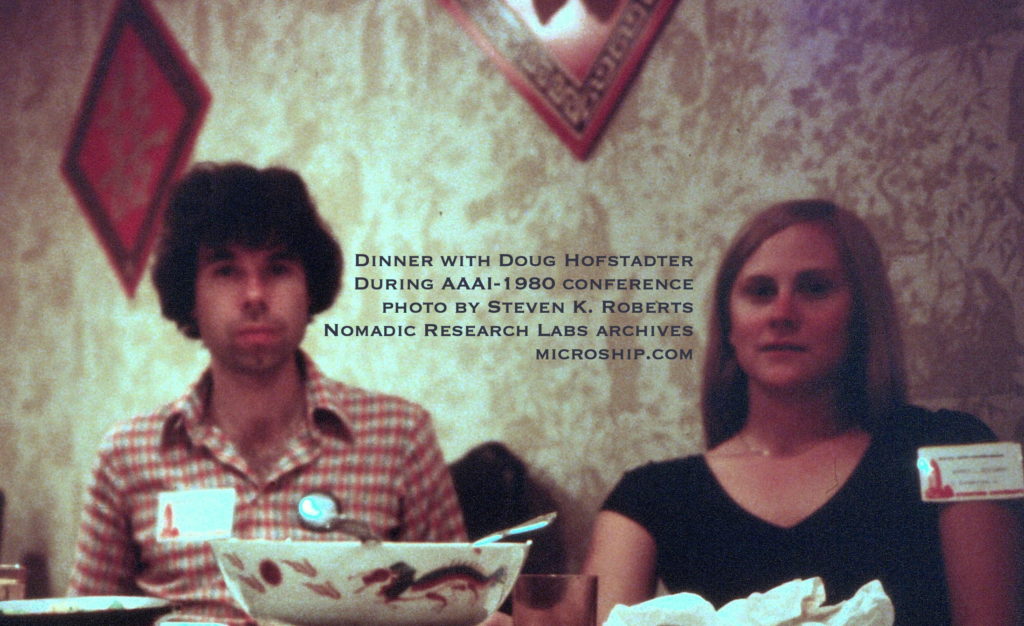

In August 1980, I flew from Ohio to attend the Artificial Intelligence conference (AAAI) held at Stanford University, leading to my Byte cover story about AI. It was a huge adventure, connecting me with some incredible people… I have fond memories of an evening with astounding brains at Xerox PARC after playing with the Dolphin and Dorado bitmapped machines that later inspired the Macintosh, hanging with computer-music geeks, a sparkling dinner with Doug Hofstadter and Scott Kim, meeting science fiction authors James Hogan and Vernor Vinge, stumbling into the FORTH subculture, diving into the wildly premature exuberance of the AI community, reconnecting with the gang at Godbout Electronics that had sparked my Cybertonics parts business 6 years earlier, and getting a heady glimpse of what life would have been like had I landed in Silicon Valley instead of languishing in the midwest. Gratuitous name-dropping and maudlin retrospective aside, it was an insane week, complete with a side adventure that involved catching a prowler breaking into Stanford dorm rooms and getting to know the campus cops (narrowly escaping a scary encounter, alone in a three-story dorm building, a few days before the conference began).

While the AI event was wrapping up, I found out that the first LISP conference was about to take place, so I urged my editor at Byte to extend my stay on campus. On a whim, they said to go for it, so I threw myself into the culture of the language as a newbie. (I also ran into the lead cop over at Tresidder Memorial Union and gave him the news. “Alright! Maybe we catch us another burglar!”)

Anyway, here is the resulting piece, which was never published (the editor wanted a detailed technical article on LISP programming, and this was more cultural-philosophical… though it informed my AI Programming section in the textbook I was writing). My thanks to Steve Orr for the 2018 scan/OCR of the manuscript I sent him in 1980, bringing it back to life after 38 years.

by Steven K. Roberts

Written for Byte Magazine

September, 1980

For two weeks at the end of August, the campus of Stanford University buzzed with excitement. That’s not a terribly unusual phenomenon for Stanford, of course, but on this particular occasion it represented a remarkable symposium of brilliant minds — all somehow involved with Artificial Intelligence (AI) and related provocative aspects of late-20th century computer science.

Two back-to-back events set the stage for this cerebral mass encounter. One, drawing over 1,000 researchers from all over the world, was the First Annual National Conference on Artificial Intelligence. The other, with nearly 300 attendees, was the 1980 LISP conference. The two events offered a rare opportunity to assimilate a broad-spectrum picture of current AI research.

Interest in Artificial Intelligence and its appropriate tools is hardly a new phenomenon, but it is fast reaching the “knee” of its exponential growth curve. Interests that were once the exclusive and esoteric domain of a small group of purely academic researchers are now turning the heads of industrial engineers, doctors, educators, random technologists, and hobbyists. It is becoming clear that computers, to truly be “intelligence amplifiers,” must themselves be intelligent enough to allow relatively unfettered interaction with humans.

It’s a subject that rather reliably triggers an emotional response: either animated enthusiasm, as was the case at Stanford, or paranoid dismay about the erosion of man’s innate superiority. “It’s scary,” some people say, as they contemplate neo-Luddite imagery of servitude to machine masters. But within our grasp is a technology of “knowledge engineering,” in which a combination of techniques enables systems to exhibit expert-level performance in any of a variety of domains.

These systems are becoming available as powerful tools (in such areas as medical diagnosis, database inquiry systems, computer-aided-design, earth resource mapping, and legal assistance) and, with their ability to efficiently integrate and use the accumulated wealth of human knowledge about a subject, offer us a vast new intellectual resource.

If this seems to have a bit of the “gee whiz” about it, consider: Human knowledge can be said to consist of two major classes of information. The first, which can be called factual knowledge, is made up of relatively “hard” data: textbook-style knowledge and other facts that make up the most obvious bulk of libraries and other existing information resources. The second, which we’ll call heuristic knowledge, is a little harder to pin down. It includes judgement, intuition, relational concepts, and the art of good guessing which comes from experience in a field. It also includes knowledge outside the specific domain of an intellectual task: problem-solving strategies and a non-symbolic awareness of “how to think.” This last kind has been called meta-knowledge.

The single most noteworthy characteristic of present and future AI systems is that they incorporate not only the factual knowledge to which “traditional” systems are limited, but heuristic knowledge as well.

The implication here is that a system can be made to exhibit intelligent behavior without the ultra-high-speed exhaustive searches and simulation algorithms that characterize the classic brute-force approach. Through careful construction of databases that incorporate heuristic knowledge implicitly in their structure, access to factual knowledge and its relationships can be had in a fashion much more closely related to the way we humans think. The more heuristic knowledge a system has, the less it has to search — and the less bogged-down it will be when confronted with a highly associative task (such as image recognition) which we, being highly parallel systems, manage to accomplish with a minimum of conscious effort.
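The claim that more heuristic knowledge means less searching can be made concrete with a small sketch. The example below is an illustration, not anything presented at the conference: it assumes a toy problem (finding a route across an open grid) and uses a numeric distance estimate as a narrow stand-in for the much richer heuristic knowledge described above. The function names `search`, `no_knowledge`, and `manhattan` are hypothetical. The same best-first search runs twice, once with no guidance and once with the estimate, and reports how many positions each had to examine.

```python
import heapq

def search(start, goal, size, heuristic):
    """Best-first search on an open size x size grid.

    Returns (path_cost, nodes_expanded). With a heuristic that always
    returns 0 this degenerates into an uninformed, exhaustive search.
    """
    h0 = heuristic(start, goal)
    # Queue entries are (estimated total, estimate to goal, cost so far, node);
    # ties on the total are broken in favor of nodes that look closer to the goal.
    frontier = [(h0, h0, 0, start)]
    best_cost = {start: 0}
    expanded = 0
    while frontier:
        _, _, cost, node = heapq.heappop(frontier)
        if cost > best_cost[node]:
            continue  # stale entry: a cheaper route to this node was found later
        expanded += 1
        if node == goal:
            return cost, expanded
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nxt[0] < size and 0 <= nxt[1] < size:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    h = heuristic(nxt, goal)
                    heapq.heappush(frontier, (new_cost + h, h, new_cost, nxt))
    return None, expanded

def no_knowledge(node, goal):
    """Brute force: no idea which direction is promising."""
    return 0

def manhattan(node, goal):
    """Heuristic: grid distance remaining, assuming no obstacles."""
    return abs(node[0] - goal[0]) + abs(node[1] - goal[1])

blind_cost, blind_expanded = search((0, 0), (9, 9), 10, no_knowledge)
guided_cost, guided_expanded = search((0, 0), (9, 9), 10, manhattan)
print("brute force examined", blind_expanded, "positions")
print("heuristic examined", guided_expanded, "positions")
```

Both runs find a route of the same length, but the guided run examines far fewer positions. The heuristic here is only a number; the systems discussed in this article encode judgement in the structure of their databases, which this sketch does not attempt to model.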

But until we have multi-million element “somatotopically organized, layered computers” (reference 7), we face a central problem in the creation of intelligent machines: computers are essentially serial devices. Operations that the brain seems to perform with the simultaneous activation of widely distributed resources, computers must perform through the painstaking execution of algorithms which in some fashion serve to accomplish the same end. Often the dichotomy is painfully obvious, but one of the things computers offer in compensation is speed.

Another thing is LISP.

The task of writing a program — be it in assembly level code, BASIC, PASCAL, or whatever — is traditionally characterized by a specific set of disciplines. In essence, the programmer must define some sort of algorithm which will accomplish the objective, then map that algorithm onto the available resources of the computer using a specific set of tools — the programming language. Conversion of an idea from its conceptual form into its coded form involves the acceptance of nearly brutal language restrictions which result in strict formalization of the process. The programmer starts with an idea of WHAT he wants the system to do, then spends hours or months describing HOW it should be done.

The generally-accepted complexity of this task has spawned some rather elaborate disciplines which reduce the probability of error. Among these is the body of precepts that has come to be known as “structured design.” You have probably witnessed bitter struggles over the relative merits of top-down and bottom-up design — each of which suggests a controlled approach to designing a program and, hopefully, making it work.

But there are problems with all this, problems which become particularly acute when the programming task at hand involves not the sequential handling of accounts or the polling of a communications channel, but instead involves the modeling of human cognitive processes. It is almost prohibitively difficult to write a program to associate data objects via their properties or to perform deductive reasoning when the only languages available require expression of the problem in a fashion that is completely alien to human thought. It can be done, but few have the stubbornness to try. The underlying difference between traditional programming and the type called for by problems of Artificial Intelligence is that the latter involves structural interrelationships and abstract symbols, whereas the former can generally be considered in numeric terms. Early attempts to cross this dichotomy resulted in mappings of symbolic problems onto more-or-less equivalent numeric ones, but in the process, most of the naturalness of problem expression was lost.

Enter LISP

The common programming languages can be neatly segregated into the two classifications of “interpreters” and “compilers,” depending upon the amount of source text bitten off at any given time by the language translator for conversion into machine code. LISP cannot be placed in either category without being forced uncomfortably into a mold.

Most languages operate on the general principle of inhalation of essentially undifferentiated strings of ASCII, detection of certain delimiters, identification of labels and reserved words, determination of an appropriate object-code segment or subroutine call, and either execution (if an interpreter) or addition of the new object statement(s) to an output file. LISP cannot be said to behave this way.

The typical programming environment imposes strict syntactical restrictions on the programmer, and presents a limited selection of somewhat primitive (in human terms) tools for use. Although sophisticated tools (macros, subroutines, libraries) can be constructed from these primitives, the language itself is unchanged — almost crystalline in its structural rigidity. APL has been compared to a diamond, which cannot be made into a larger diamond by the addition of a second one. LISP, in this context, is a ball of clay. Its characteristic of “extensibility” permits the language itself to grow without limit, providing the programmer with ever more sophisticated, custom-tailored tools.

The generation of self-modifying code in almost any programming language is rightly considered bad practice. It’s hard to debug, non-reentrant, and generally pathological (though sometimes of dazzling cleverness). But LISP makes no distinction between functions and data. It is, by its very nature, a dynamic and growing environment — changing its shape and texture to fit the task. Paradoxically, in what seems at first glance to be a hopelessly convoluted mess, LISP outshines ’em all in elegance and simplicity.

(Them’s fightin’ words in an industry wherein nearly a dozen competing computer languages face off regularly, backed by staunch, almost militant, supporters. Bear with me.)

Just about any language you can name possesses built-in functions for the manipulation of numeric data, with the scope of those functions generally well suited to the application domain of the language in question. But just about any language falls flat on its parser when confronted with the task of efficiently representing symbolic information — not in the sense of “CRLF is a symbol for 0D hex,” but in the sense of representational thought like: “DOG is a symbol for a carnivorous, four-legged domesticated mammal and PHYDEAUX is a symbol for one particular DOG.”
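To give the DOG and PHYDEAUX example some shape, here is a minimal sketch of symbols carrying property lists, loosely in the spirit of LISP's property lists but written in Python for illustration. The table `properties`, the function `get_property`, and the `"is-a"` link are all hypothetical names chosen for this example, and treating "PHYDEAUX is one particular DOG" as a simple `is-a` link is a simplification.

```python
# Hypothetical symbol table: each symbol owns a small property list.
properties = {
    "MAMMAL": {"warm-blooded": True},
    "DOG": {"is-a": "MAMMAL", "legs": 4, "diet": "carnivorous", "domesticated": True},
    "PHYDEAUX": {"is-a": "DOG"},
}

def get_property(symbol, name):
    """Look up a property on a symbol, inheriting along the is-a chain."""
    while symbol is not None:
        plist = properties.get(symbol, {})
        if name in plist:
            return plist[name]
        symbol = plist.get("is-a")  # climb to the more general symbol, if any
    return None  # nothing known about this property

print(get_property("PHYDEAUX", "legs"))          # found on DOG
print(get_property("PHYDEAUX", "warm-blooded"))  # found on MAMMAL
```

Nothing about PHYDEAUX's legs is stored on PHYDEAUX itself; the answer comes from what is known about DOG. The program works with the symbols and their relationships, not with any numeric encoding of them.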

This has all sorts of implications. One of the most compelling, especially in light of the conferences that engendered this article, is the image of LISP as a fluid medium for the expression of problems in AI programming. Not only does it allow a process to be written in a relatively abstract way independent of representational encoding, but it provides, with its lack of function-data differentiation, a means for programs to encode new information by modifying or extending themselves. (Rather than try to pin this down here, I refer you to Reference 3.)
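For readers who have not seen it in action, the lack of function-data differentiation can be hinted at with a rough sketch. The example below is not LISP and not taken from any of the references: it is a Python approximation in which a "program" is a nested list, and the names `evaluate`, `make_doubler`, and `OPS` are hypothetical. Because the program is an ordinary list, another piece of code can build a new program out of it with plain list operations.

```python
import operator

# Hypothetical table of primitive operations, keyed by symbol.
OPS = {"+": operator.add, "*": operator.mul}

def evaluate(expr, env):
    """Evaluate a nested-list expression such as ["+", "x", 1]."""
    if isinstance(expr, (int, float)):
        return expr              # a number stands for itself
    if isinstance(expr, str):
        return env[expr]         # a symbol is looked up in the environment
    op, *args = expr             # otherwise: [operator, argument, ...]
    values = [evaluate(arg, env) for arg in args]
    result = values[0]
    for value in values[1:]:
        result = OPS[op](result, value)
    return result

def make_doubler(expr):
    """Build a new program from an old one by ordinary list manipulation."""
    return ["*", 2, expr]

program = ["+", "x", 1]           # the program is just data
doubled = make_doubler(program)   # a program that contains the first one

print(evaluate(program, {"x": 3}))  # (3 + 1) = 4
print(evaluate(doubled, {"x": 3}))  # 2 * (3 + 1) = 8
```

In LISP this costs nothing extra, since programs are already lists and the evaluator is part of the language; the sketch has to build both by hand.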

It is clear that this language is on a rather different level of abstraction from the more concrete languages that abound in the computer world. Sitting down at a LISP machine and building a program is a highly interactive task, wherein the desired system comes about incrementally. The designer shapes tools and data structures, tries things empirically, builds and modifies subassemblies, then gradually integrates everything into a “production system” or finished design. The system supports true debugging, handles details of storage management, and generally acts in a “friendly” fashion quite unfamiliar to those whose programming life has been spent banging monolithic aggregates of code into a form that not only compiles (or interprets, assembles, or whatever) without errors, but also works — or at least seems to for all the sets of conditions that can be conveniently tested.

A LISP system aids in the implementation of ideas; it doesn’t just translate a defined algorithm into machine code.

Presumably, the tone of all this rhapsodizing suggests some of the rationale behind the existence of a LISP conference which drew active participants from all over the place and spawned, in addition to much enthusiasm, a 247-page Conference Record. Since, by definition, LISP is not cast in concrete, its universe of users has been quite active in the development of new tools, new applications, and whole new ways of looking at computer systems.

In addition to the ongoing magic of interaction between active and energetic people, the conference provided a three-day program of technical sessions. None of these were at an introductory level (a fact which sent the author scrambling to the bookstore for some intense pre-conference cramming), although there was a tutorial presented by John Allen of The LISP Company on the Friday preceding the start of the conference.

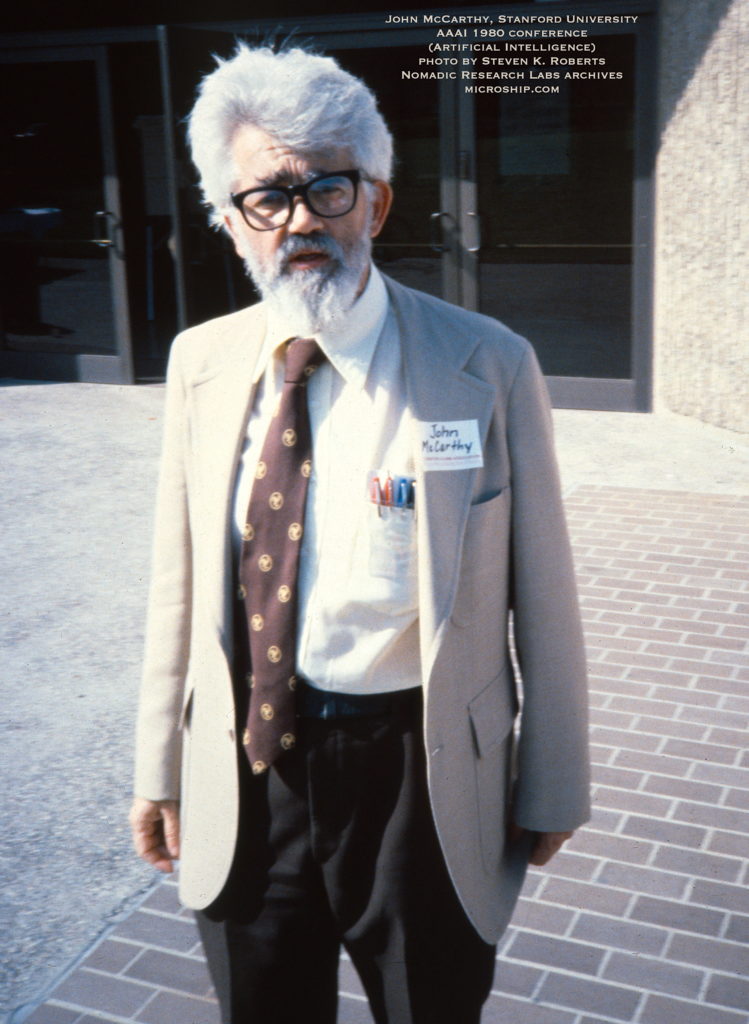

The program opened with an address by John McCarthy, the grand old man of LISP who, in 1958, gave birth to the original implementation while working with Marvin Minsky as a co-founder of the MIT Artificial Intelligence Project. He recognized at the time that the expression of structural interrelationships in a numeric computing environment was awkward, at best. Now, just over 21 years later, with LISP the second-oldest surviving computer language (after FORTRAN), he is still very much active in the field as the director of Stanford’s AI Lab. In his talk, he set the tone for much of the subsequent conference activities by noting the factors responsible for the longevity of the language and pointing out areas in which improvement is necessary.

There followed a series of talks, ranging from the mildly esoteric to the stratospheric, in which such widely diverse topics as lazy variable extent mechanisms, LISP in multiprocessing environments, and natural language processing were expounded upon in detail.

On the third and last day of the conference, there was a panel discussion on the general subject of Future LISP. The air sizzled with excitement as hotly contested characteristics of the language were alternately championed and denounced, often with wild gesticulation, by various members of this most amazing assemblage. The discussion was chaired by Bruce Anderson of Essex University, and the author’s notebook bears witness with its frantic scribblings to the wit and poignancy of the event:

- “LISP isn’t a language – LISP can never die. It’s a way of life.”

- “LISP is the art of putting parentheses around new ideas and saying they were there all the time.”

- “DWIM, not DWTWHMY (Do What I Mean, not Do What This Would Have Meant Yesterday).”

- “LISP’s core occupies some kind of local optimum in the space of programming languages.”

- “… so close to the machine you can feel the bits between your toes…”

- “Lots of Irritating Single Parentheses”

Ah, live minds.

LISP Outside Academia

The predominance of VAX systems (and other, ahem, somewhat unaffordable manifestations of the computer designer’s art) in the world of LISP is no longer a reason to avert your gaze when you are face-to-face with an article like this one. As BYTE pages reveal regularly, a lot’s happening in the “micro” world, with 64K RAM cards selling assembled for less than the chip cost for 6K of 2102s back in the 8008 days. Among the endless implications of this is the realistic availability of memory-eaters such as LISP which run under CDOS and CP/M.

A forthcoming issue of BYTE will carry a survey article on the relative merits of some microprocessor LISPs, but we can note here that Cromemco, The LISP Company, and The Soft Warehouse all offer healthy versions which can be realistically put to work in a small system.

There exists, somewhere, a theoretical optimum in the interrelationship between human and machine. Each has areas of great competence; each likewise suffers at the other extreme. The ultimate computing machine may well turn out to be not the million-node multiprocessor optimized for sparse-matrix constructs, but instead a cheaper heuristic system very well integrated with its human host. Maybe not. But if not, we’ve a number of years to survive yet before brainlike systems make their debut outside a handful of well-funded research labs.

In any case, the choices confronting a human who desires “intelligence amplification” are many. There’s good ole BASIC, but for some reason it doesn’t inspire too many international conferences. It’s also the worst of both worlds — it’s not only awkward for symbolic representation, but contrary to prevailing notions of structure. There are a number of languages that don’t strike out quite so resoundingly, but of them all, LISP seems the prime candidate for personal computing in the most literal sense of the term.

REFERENCES

1. Proceedings of The First Annual National Conference on Artificial Intelligence (AAAI 1980).

2. Conference Record of the 1980 LISP Conference.

3. Allen, John, “An Overview of LISP,” BYTE special issue on LISP, August, 1979.

4. Winston P H, B K P Horn, LISP, Addison-Wesley.

5. Charniak E, C K Riesbeck, D V McDermott, ARTIFICIAL INTELLIGENCE PROGRAMMING, Lawrence Erlbaum Associates.

6. Cromemco LISP Manual.

7. Arbib M A, The Metaphorical Brain, Wiley Interscience.

8. Huyck P H, N W Kremenak, DESIGN & MEMORY, McGraw-Hill.

ABOUT THE AUTHOR

Steven K. Roberts is a full-time writer and part-time microprocessor systems consultant currently living near Columbus, Ohio. He has been involved in industrial system design and home computing for six years, and has published over 30 articles about his work. His first book, Micromatics, was recently published by Scelbi, and he is now writing Industrial Design with Microcomputers for Prentice-Hall.

With the addition of Cromemco LISP to his home system, he is gradually giving in to an obsession with Artificial Intelligence – “now that personal computing is fun again.”

A later article researched at this and subsequent AI conferences, with emphasis on programming issues, is entitled To Teach A Machine and appeared in MIT’s Technology Review in January 1982.
